Design and Implementation of an Autonomous Smart Food Delivery Robot for Commercial Environments

Received: 29 August 2025 Revised: 23 September 2025 Accepted: 10 December 2025 Published: 16 December 2025

© 2025 The authors. This is an open access article under the Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/).

1. Introduction

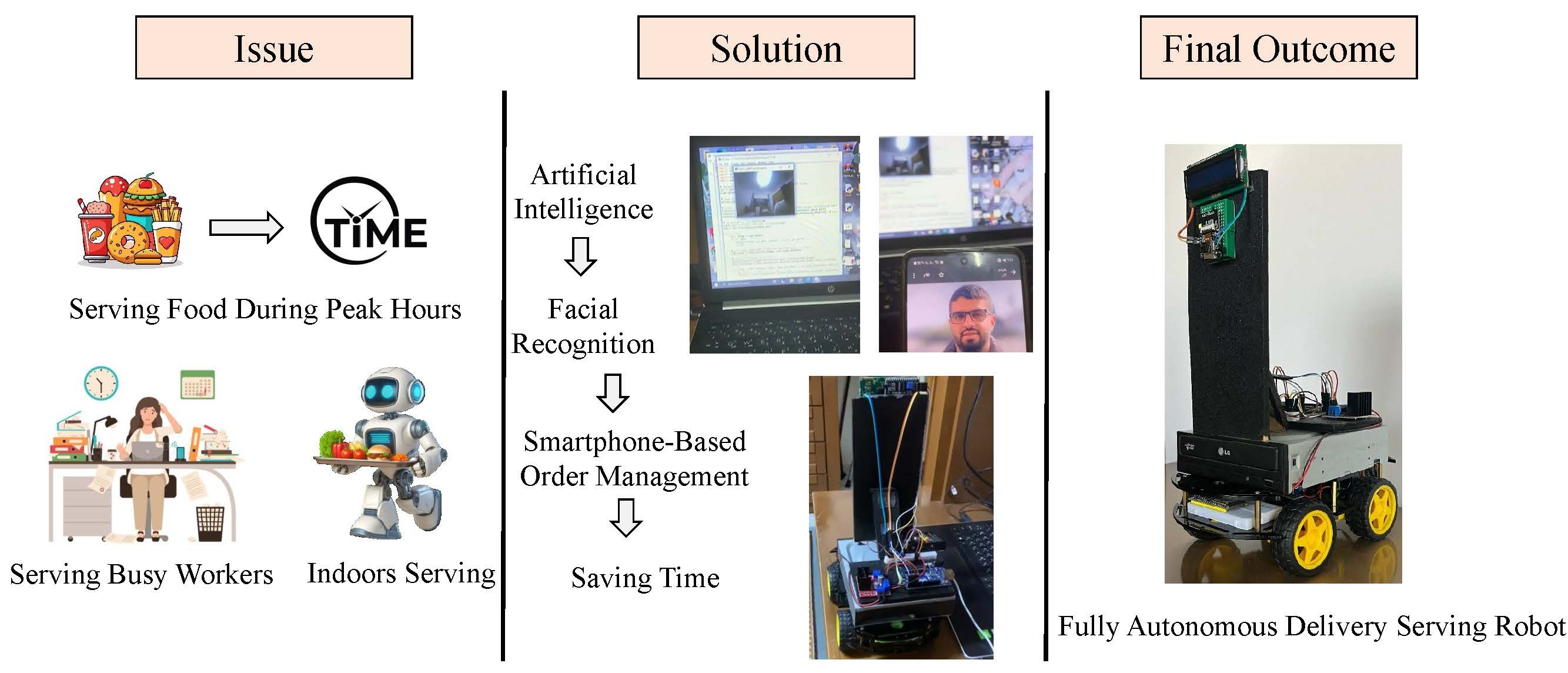

Advances in robotics have transformed industries and everyday life by automating processes previously handled only by humans [1]. Delivery services are among the sectors experiencing significant innovation. University campuses, hotels, and restaurants increasingly prioritize convenience, efficiency, and cleanliness. Delivery robots have been identified as a way to address several challenges, including crowding, staff shortages, and the demand for contactless service [2]. By combining autonomous navigation, artificial intelligence (AI), and wireless communication, these systems can perform delivery tasks accurately and quickly.

In recent years, a number of studies have explored different aspects of delivery robots. Researchers in China developed a low-cost indoor navigation system that combines ultra-wideband positioning with odometer and inertial sensors to improve indoor localization accuracy [3]. In Bangladesh, a prototype robot using GPS-guided autonomous movement was developed during the COVID-19 pandemic to reduce the health risks associated with product delivery [4]. In India, a robot called MedBuddy was developed that can be controlled via Bluetooth and provides a live camera feed accessible from a smartphone [5]. A study conducted in Villupuram introduced a wireless waiter robot with a Bluetooth-enabled mobile interface and a radio-frequency-based indoor navigation system [6]. Similarly, researchers in Iraq built a line-following robot for food service environments using an Arduino board and a Bluetooth module [7]. Delivery robot behavior in shared spaces has also been studied by researchers in India, Egypt, the United States, and Saudi Arabia. That work identifies social presence and human-robot interaction as key aspects of improving the user experience [8].

A number of autonomous delivery robots have been deployed internationally in recent years [9], including those developed by Starship Technologies [10] and the Co-op [11]. Several studies have demonstrated the feasibility and acceptability of robotic delivery systems for delivering groceries and parcels [11] in urban and campus environments.

Hossain [12] explored how self-driving robots are transforming the delivery of food, groceries, and packages in urban areas. He noted that companies such as Starship and Nuro are deploying robots worldwide, with some handling millions of deliveries and others offering features such as autonomous loading and unloading. According to Kim [13], cooperative AI research focuses on developing socially capable agents that can interact with humans and other agents in dynamic environments. The author introduces Co-op Tile World, a custom environment designed to test human-agent cooperation, and shows how task difficulty and agent alignment affect social interaction and outcomes. Strubelt [14] emphasized the role of e-commerce growth in driving advances in autonomous last-mile delivery, including sidewalk autonomous delivery robots (SADRs). Valdez and Cook [15] examined how autonomous delivery robots are transforming urban life in Milton Keynes. They emphasized the importance of relational and topological spatial imaginaries for understanding distributed cognition and non-human agency in cities. According to their study, deploying AI and robots involves transversal power dynamics that operate across topological networks, where reach and connectivity matter more than fixed territorial boundaries. Taken together, these studies indicate that autonomous delivery robots can enable efficient, scalable, and socially adaptive delivery in commercial settings. Building on these initiatives, this study proposes a compact and cost-effective delivery robot for indoor commercial environments, such as restaurants and universities.

Despite these advances, a number of limitations remain. Most robots cannot adapt in real time, reliably avoid obstacles, or maintain contextual awareness, especially in dynamic or semi-structured environments such as universities [16]. In addition, few systems are designed cohesively, addressing technical complexity and user interaction together. This paper proposes a smart wheeled delivery robot capable of autonomous navigation [17], real-time obstacle detection [18], and human interaction [13]. The system, intended for university and hospitality environments, recognizes faces, communicates with a mobile application, and executes delivery tasks. It is built from Arduino, ESP32, and ESP32-CAM boards together with Python software. The aim of the study is to develop a reliable, cost-effective robotic platform that improves the efficiency of institutional delivery services and user satisfaction with them.

2. Materials and Methods

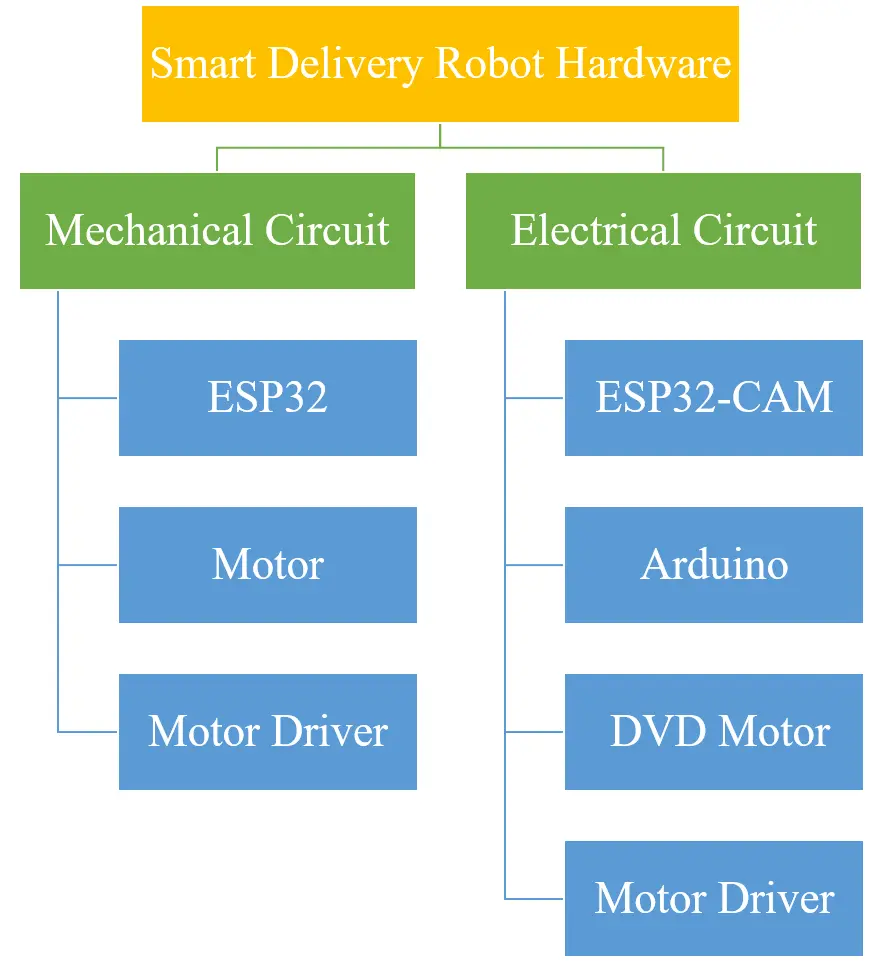

This section describes the design and functionality of the system's mechanical and electrical components, its hardware, and its software. The physical system consists of mechanical and electrical components, whose architecture and connectivity are illustrated by the hardware and block diagrams. The software provides the system's intelligence and control, allowing it to operate efficiently and to interact with its environment and users.

2.1. Mechanical and Electrical Components

As smart devices and high-speed internet connections proliferate, the Internet of Things (IoT) is becoming an increasingly critical technology across a variety of industries [19,20]. IoT refers to a system of interconnected physical objects, such as sensors, actuators, and other devices with limited processing capabilities, that are linked through private or public networks [21]. Many of these devices can be accessed and controlled remotely to perform specific functions. They also exchange data with one another so that intelligent decisions can be made.

A detailed description of the electronic components used in our system development is presented in this section. Additionally, step-by-step instructions are provided on how to construct and assemble the final hardware prototype.

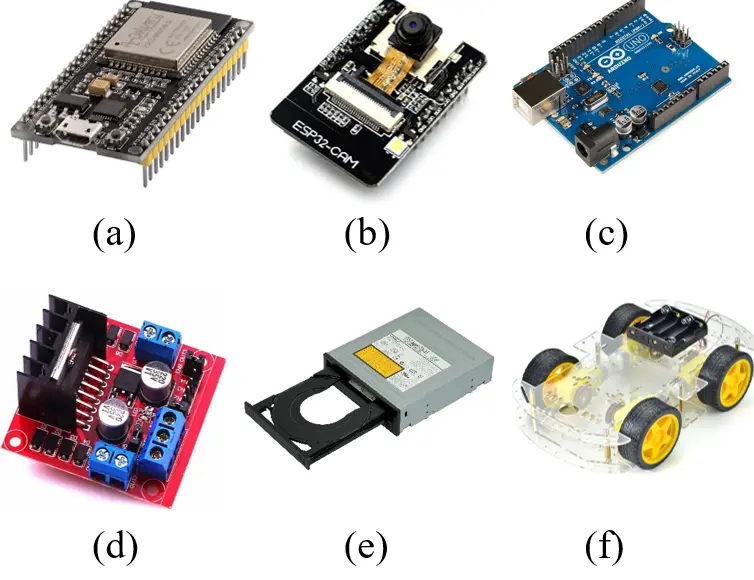

The first component is the ESP32, shown in Figure 1a. The ESP32 is an open-source, low-cost microcontroller series that integrates Wi-Fi and dual-mode Bluetooth [22]. Several processor options are available across the series, including single-core and dual-core Tensilica Xtensa LX6 processors, a dual-core LX7 processor, and a single-core RISC-V processor. This study uses the ESP32 module as the main controller for the robot's movement. It receives navigation commands from a dedicated mobile application.

Figure 1. (a) ESP32, (b) ESP32-CAM, (c) Arduino, (d) L298N motor driver, (e) DVD drive, and (f) Four-wheel robot car chassis.

The second component is the ESP32-CAM, shown in Figure 1b. It is an IoT development board designed to provide visual input in IoT applications. It combines an ESP32 chip, which provides wireless communication, with a 2-megapixel OV2640 camera [23]. In this work, the ESP32-CAM module was used to detect human faces, which allowed the robot to react appropriately [24] by opening or closing the drawer.

The Arduino is shown in Figure 1c. Arduino boards are a family of open-source microcontroller boards that have become widely popular for their ease of prototyping and development [25]. The Arduino Uno was selected for this project because of its compatibility and simplicity [26]. Programmed through the Arduino Integrated Development Environment (IDE), it controls the drawer mechanism whenever a person is detected by the ESP32-CAM module.

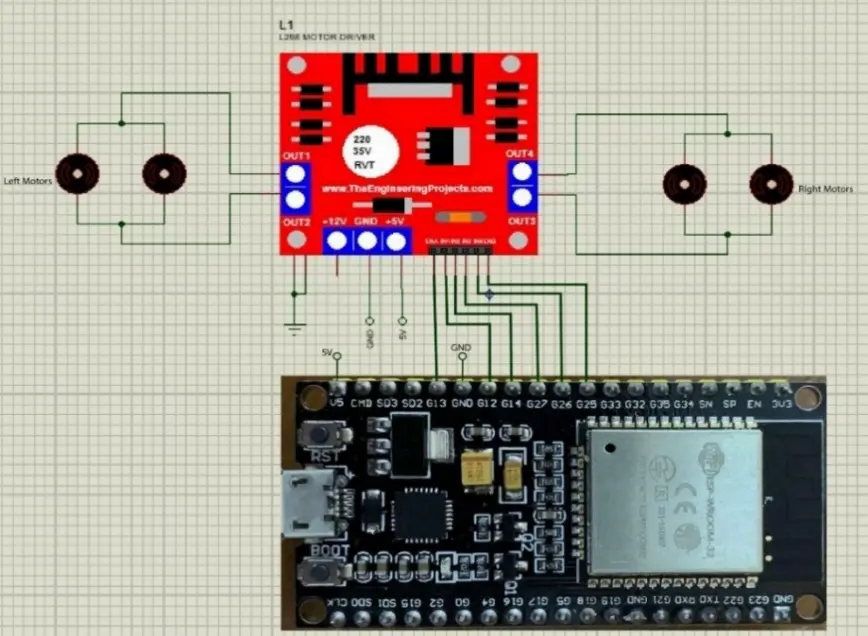

Figure 1d shows the L298N motor driver, which controls the robot's movement. It is a robust, high-current motor controller capable of driving DC and stepper motors [27]. The module combines the L298 IC with a 78M05 voltage regulator, which provides the 5 V output. It can drive up to four DC motors, or two DC motors with direction and speed control. In this study, the L298N driver was connected to two motors, one propelling the robot's wheels and the other moving the drawer. Both the ESP32 and the Arduino boards send control signals to the motor driver.
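The direction and speed control provided by an H-bridge driver such as the L298N can be sketched as a small lookup: two logic inputs select the direction and a PWM duty cycle on the enable pin sets the speed. The Python model below is only an illustration of that logic, not the firmware used in this study; the names `ChannelState` and `l298n_channel` and the command strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelState:
    """Signals applied to one H-bridge channel (hypothetical model)."""
    in1: int      # logic level on the first direction input (0 or 1)
    in2: int      # logic level on the second direction input (0 or 1)
    duty: float   # PWM duty cycle on the enable pin, 0.0 to 1.0


def l298n_channel(command: str, speed: float = 1.0) -> ChannelState:
    """Map a motion command to the inputs of one L298N channel."""
    duty = min(max(speed, 0.0), 1.0)  # clamp the requested speed to [0, 1]
    if command == "forward":
        return ChannelState(1, 0, duty)
    if command == "reverse":
        return ChannelState(0, 1, duty)
    if command == "stop":
        # Enable held low: the motor is unpowered and free-runs to a stop.
        return ChannelState(0, 0, 0.0)
    raise ValueError(f"unknown command: {command!r}")


if __name__ == "__main__":
    print(l298n_channel("forward", 0.5))
    print(l298n_channel("stop"))
```

On real hardware, the three fields would correspond to the driver's IN1, IN2, and ENA pins for one motor; the second motor uses the equivalent pins of the other channel.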

Several supporting components were integrated alongside the main control units. A repurposed DVD drive mechanism was used to emulate the drawer, offering precise and compact actuation [28], as illustrated in Figure 1e. The robot's hardware was powered by lithium-ion (Li-ion) batteries, which provided a stable and portable power source. As shown in Figure 1f, the whole assembly was mounted on a four-wheel robot car chassis, which served as the structural platform for both the electrical and mechanical components. This type of chassis is commonly used in robotics education, sensor-based applications, and IoT-enabled autonomous systems.

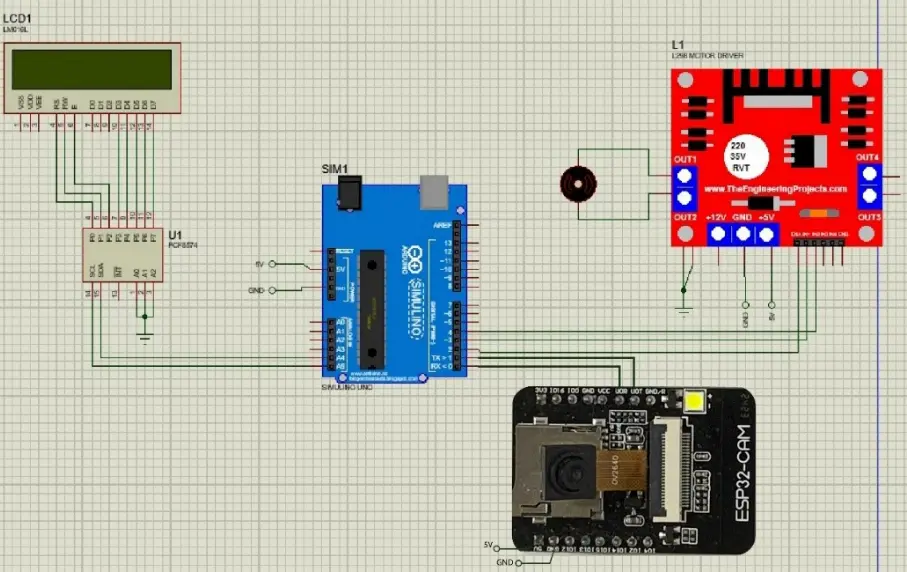

2.2. System Hardware

After analyzing various connection strategies for the robotic system, a final configuration was defined. To minimize the risk of cross-interference and to improve reliability, the mechanical and electrical subsystems were deliberately separated in this study. With this modular approach, errors or malfunctions in one subsystem do not propagate to the other. Figures 2, 3, and 4 show the wiring architecture between components and the final hardware configuration, and Figure 5 shows the assembled prototype.

2.3. Block Diagram

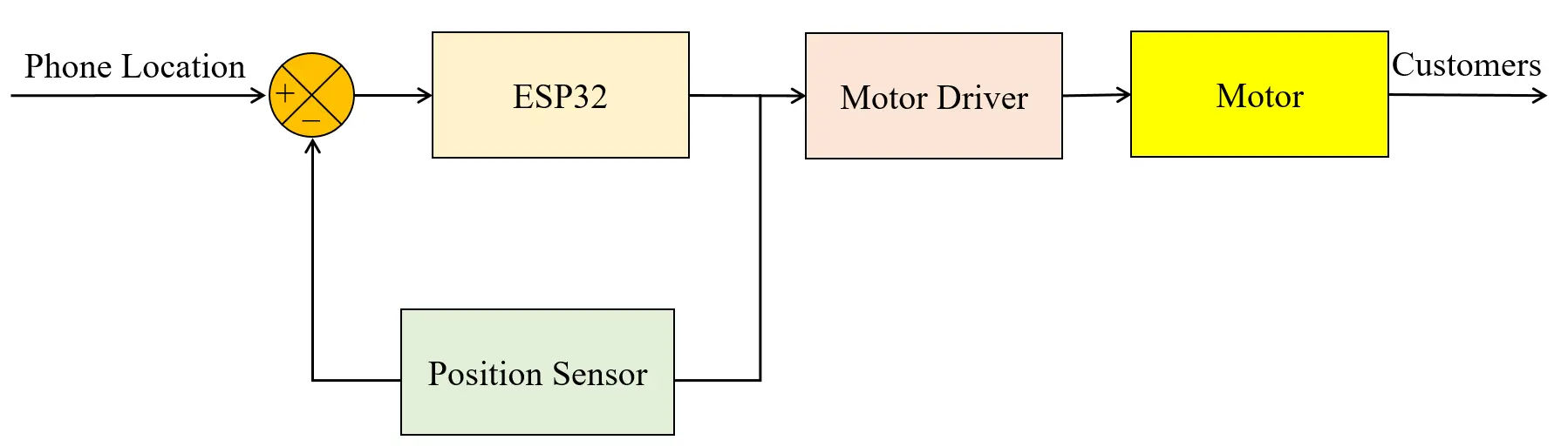

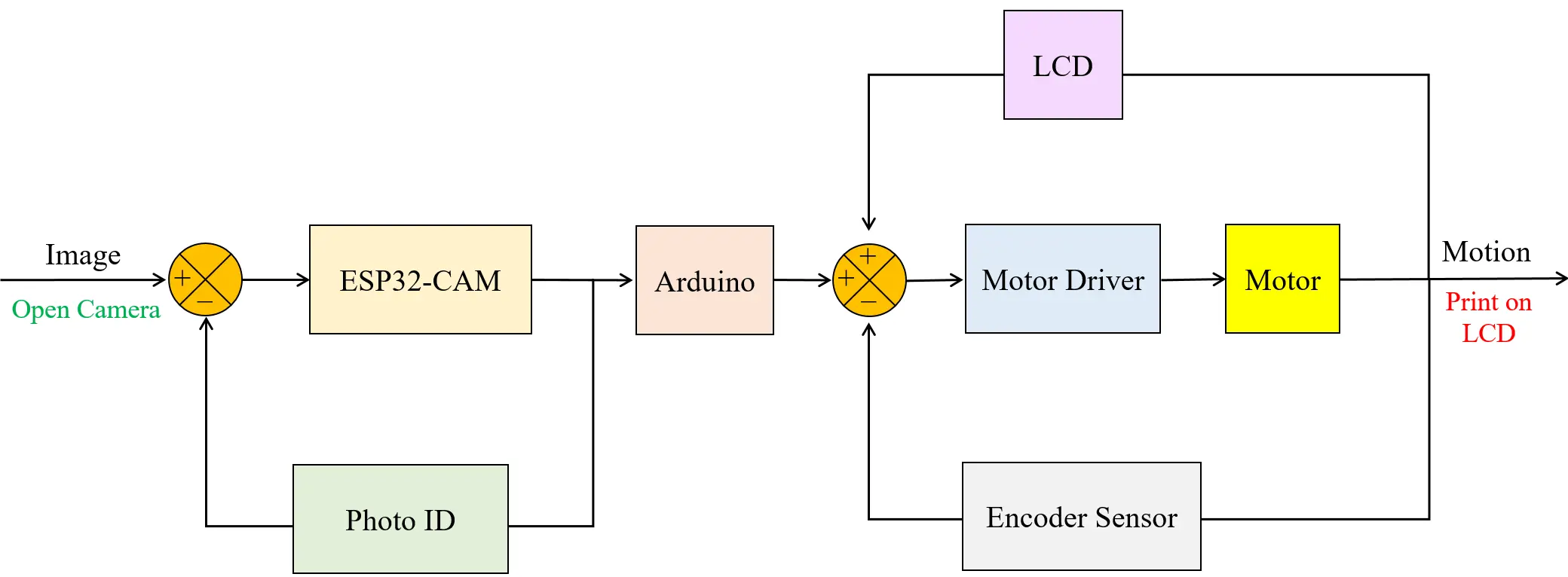

A block diagram is a graphical representation of a system that illustrates the functional relationships between its components. Engineers commonly use block diagrams to model system architecture and to analyze how different parts of a system interact, behave, and respond under various conditions. In this study, MATLAB (R2022b) was used to construct and simulate block diagrams for both the mechanical and electrical systems of the robot. To ensure clarity and modularity, the electrical and mechanical pathways were modeled separately.

The mechanical circuit block diagram represents the control flow from the ESP32 controller to the drive motor. It includes feedback from the position sensor, as shown in Figure 6.

The transfer function describing this interaction is given by:

where $${G}_{c}\left(s\right)$$ represents the ESP32 controller, $${G}_{d}\left(s\right)$$ is the motor driver, and $${G}_{m}\left(s\right)$$ is the motor dynamics.
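For reference, when three such blocks are connected in series inside a negative-feedback loop, the closed-loop transfer function takes the standard form below. The feedback symbol $$H(s)$$ for the position sensor is an assumption introduced here for illustration; it is not defined in the text.

$$T(s)=\frac{{G}_{c}\left(s\right){G}_{d}\left(s\right){G}_{m}\left(s\right)}{1+{G}_{c}\left(s\right){G}_{d}\left(s\right){G}_{m}\left(s\right)H\left(s\right)}$$

With unity feedback, $$H(s)=1$$, the denominator reduces to $$1+{G}_{c}\left(s\right){G}_{d}\left(s\right){G}_{m}\left(s\right)$$.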

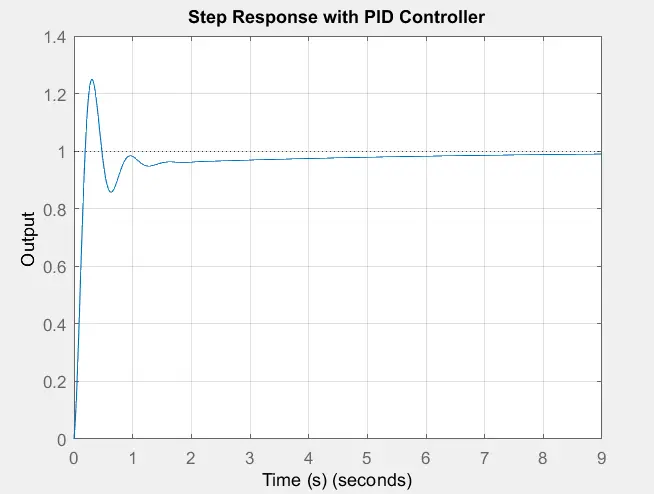

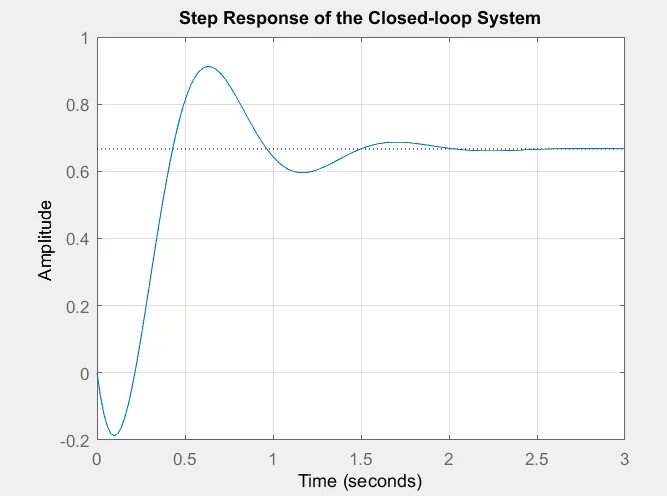

This formulation captures the dynamics of the actuation mechanism, and a PID controller was applied to enhance system stability and performance. The resulting step response, as simulated in MATLAB (R2022b), demonstrates the improved behavior of the system under closed-loop control conditions, as shown in Figure 7.
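The effect of closed-loop PID control on a step response can be illustrated with a short numerical sketch. The code below is not the MATLAB model used in this study: it assumes a first-order motor model, a unity-gain driver, unity feedback, and arbitrary illustrative gains (`kp`, `ki`, `kd`, `gain`, and `tau` are all hypothetical values), and it integrates the loop with a simple forward-Euler scheme.

```python
def simulate_pid_step(kp=2.0, ki=5.0, kd=0.01, gain=1.0, tau=0.5,
                      dt=0.001, t_end=5.0):
    """Unit-step response of a PID loop around a first-order plant.

    Plant (assumed): tau * dy/dt = -y + gain * u, i.e. gain / (tau*s + 1).
    All parameter values are illustrative, not taken from the study.
    """
    steps = round(t_end / dt)
    y = 0.0                 # plant output, e.g. normalized motor speed
    integral = 0.0          # accumulated error for the integral term
    prev_error = 1.0 - y    # avoids a derivative spike on the first step
    response = []
    for _ in range(steps):
        error = 1.0 - y                          # unit-step reference
        integral += error * dt
        derivative = (error - prev_error) / dt
        u = kp * error + ki * integral + kd * derivative
        y += dt * (-y + gain * u) / tau          # forward-Euler plant update
        prev_error = error
        response.append(y)
    return response


if __name__ == "__main__":
    resp = simulate_pid_step()
    print(f"final value: {resp[-1]:.3f}, peak value: {max(resp):.3f}")
```

The integral term is what drives the steady-state error to zero in this sketch; with a purely proportional controller the same plant would settle below the reference.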

In the case of the electrical circuit, the block diagram highlights the role of the ESP32-CAM in controlling the drive motor, with the encoder sensor providing real-time feedback as shown in Figure 8. While the system includes an initial logical control loop for activation and deactivation, it was excluded from mathematical modeling due to its binary nature. The analysis focuses on the second loop, which operates based on continuous mathematical relationships.

The transfer function for the electrical system is expressed as:

This model captures the closed-loop dynamics of the electrical control system, and the corresponding step response illustrates its temporal behavior and control effectiveness as shown in Figure 9.

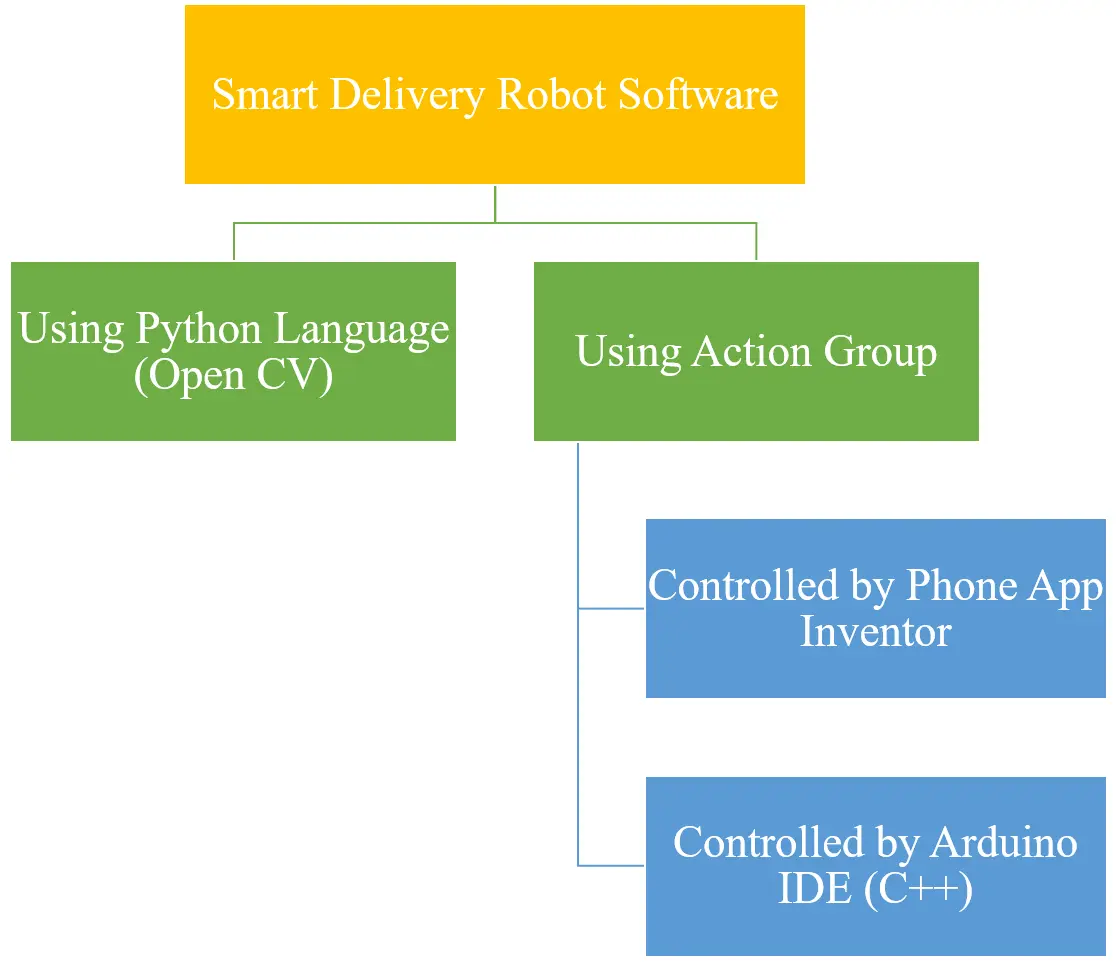

2.4. System Software

In this section, we describe the software components utilized in the research, as illustrated in Figure 10. The software is responsible for controlling the robot’s movements and executing various commands based on programmed logic and user inputs. It integrates different technologies to ensure precise operation and interaction with the environment.

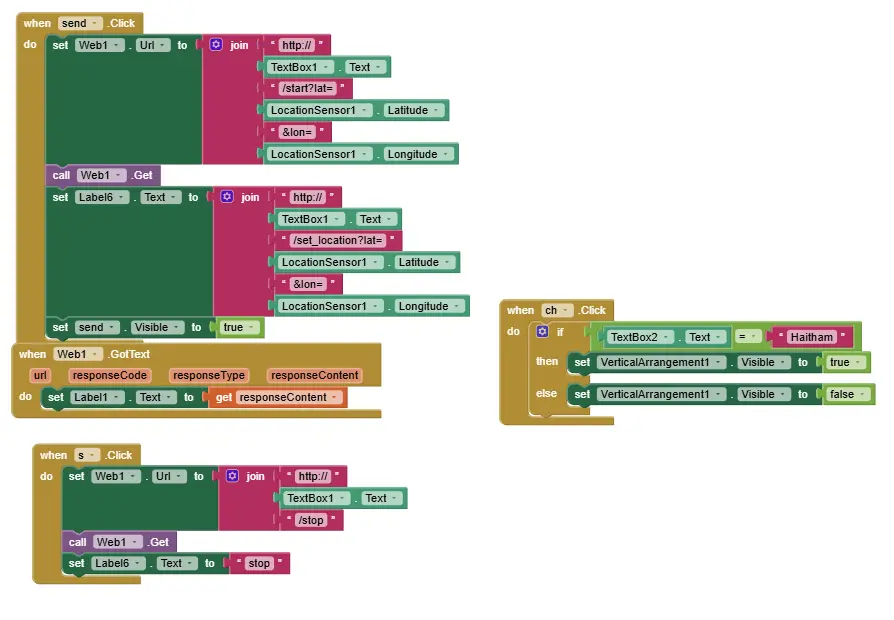

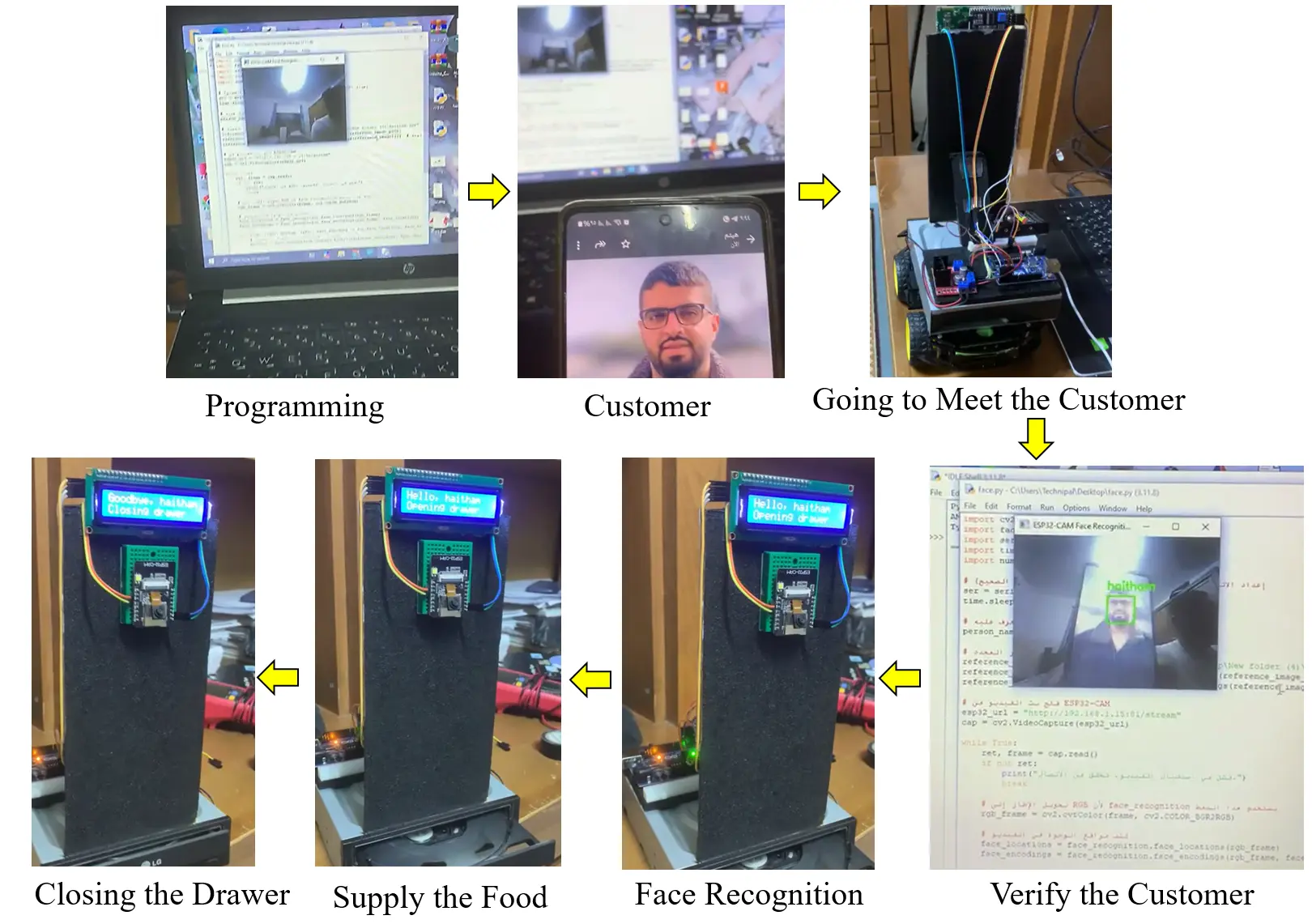

Python played a central role in the development of the robot's vision system [29]. Specifically, the OpenCV library [30] was used for image processing and facial recognition. A set of pre-stored images of authorized individuals was kept in memory, allowing the robot to match faces captured by the camera against this database. When a match is detected, the system triggers the corresponding command. In our study, a picture of one individual (Haitham) was stored in the system. As soon as the ESP32-CAM recognizes this person, it instructs the Arduino to initiate the next step. The Arduino then drives a motor to open the drawer at a predetermined location. After a ten-second pause, the motor reverses direction and the drawer closes. This sequence combines real-time image analysis with mechanical actuation.
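The match-then-trigger decision described above can be sketched independently of the image processing itself. The example below assumes each face has already been reduced to a numeric feature vector by some earlier step (not shown) and accepts the nearest stored face only if it lies within a distance threshold. The function name `match_face`, the vectors, and the threshold are hypothetical; the text does not specify which matching method was used.

```python
import math


def match_face(probe, known_faces, threshold=0.6):
    """Return the name of the closest stored face, or None if none is close enough.

    probe:        feature vector of the face seen by the camera (assumed given)
    known_faces:  dict mapping a person's name to a stored feature vector
    threshold:    maximum Euclidean distance accepted as a match (illustrative)
    """
    best_name, best_dist = None, float("inf")
    for name, reference in known_faces.items():
        dist = math.dist(probe, reference)   # Euclidean distance
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= threshold else None


if __name__ == "__main__":
    # Made-up vectors, used only to exercise the function.
    database = {"Haitham": [0.1, 0.2, 0.3]}
    print(match_face([0.1, 0.2, 0.35], database))
    print(match_face([0.9, 0.9, 0.9], database))
```

Returning `None` for an unknown face lets the caller leave the drawer closed by default, so a failed match never triggers actuation.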

In addition to the image processing system, a group of actions was defined to provide more comprehensive control over the robot. To communicate with the ESP32-CAM, the Arduino IDE was used to connect the device to a Wi-Fi network and to obtain its IP address. Python 3.12 and OpenCV 4.7.0 were then used to open a live video stream from the ESP32-CAM, enabling real-time event handling and human recognition. When a known person is detected, the Arduino Uno receives a signal from the ESP32-CAM, prints the person's name on an LCD screen, and turns on a motor to open the drawer. After 10 s, the Arduino commands the motor to reverse, closing the drawer.
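The recognition-to-actuation sequence (show the name, open the drawer, wait, close the drawer) can be sketched as a single function. This is a Python illustration of the control flow only, not the Arduino firmware; `handle_recognition`, the `display` and `motor` callbacks, and the command strings are hypothetical, and the `sleep` parameter is injectable so the sequence can be exercised without waiting.

```python
import time


def handle_recognition(name, display, motor, hold_seconds=10.0, sleep=time.sleep):
    """Run the drawer cycle for a recognized person.

    name:     recognized person's name, or None if nobody was recognized
    display:  callable that shows text (stands in for the LCD)
    motor:    callable that accepts "open" or "close" (stands in for the driver)
    Returns True if the drawer cycle ran, False otherwise.
    """
    if name is None:
        return False           # unknown face: keep the drawer closed
    display(name)              # print the person's name
    motor("open")              # drive the motor to open the drawer
    sleep(hold_seconds)        # hold the drawer open
    motor("close")             # reverse the motor to close the drawer
    return True


if __name__ == "__main__":
    handle_recognition(
        "Haitham",
        display=lambda text: print("LCD:", text),
        motor=lambda cmd: print("motor:", cmd),
        hold_seconds=0.1,      # shortened hold for a quick demonstration
    )
```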

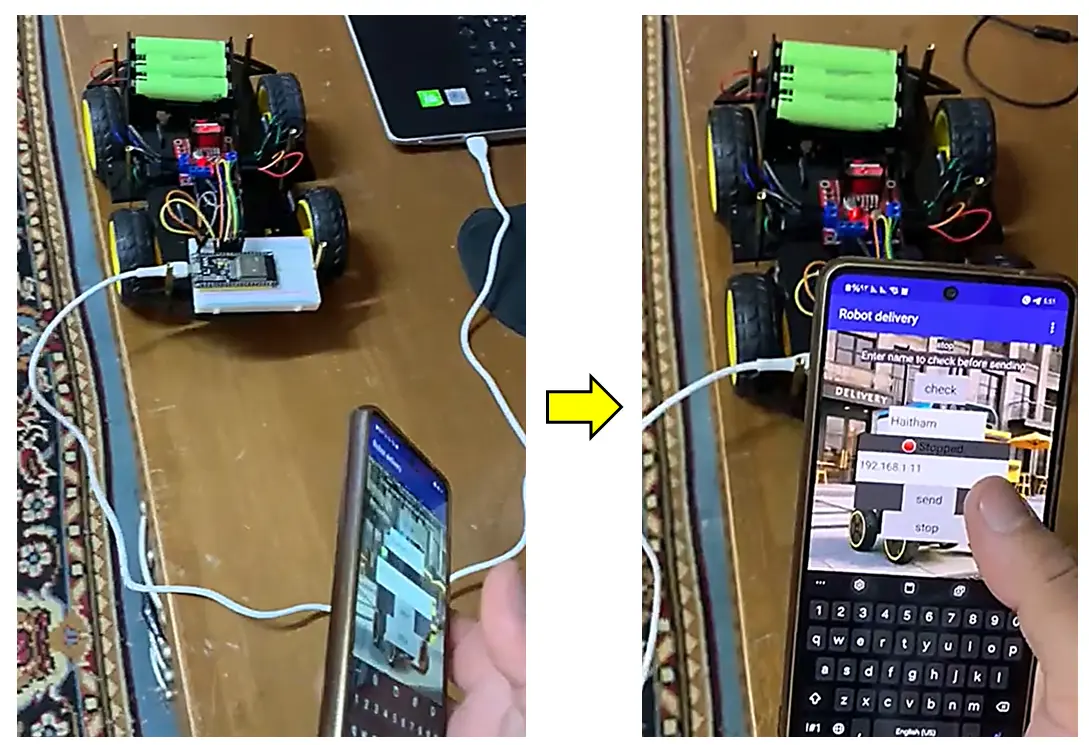

For mechanical movement, the ESP32 module was also programmed using the Arduino IDE to manage the robot’s navigation. It receives directions and commands from a mobile application over Wi-Fi, guiding the robot toward the desired location. However, due to prevailing political conditions, precise location determination through GPS was not possible, limiting the robot’s navigation accuracy.

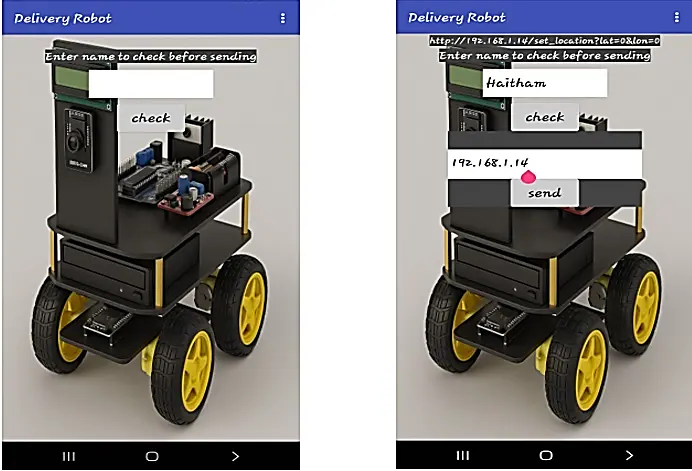

To improve user interaction, a mobile application developed using MIT App Inventor [31] was integrated into the system, as depicted in Figure 11. This application allows authenticated users to request the robot's service at their location. After entering their credentials and being verified, users can send movement commands to the robot, as illustrated in Figure 12. Because of the GPS inaccuracies resulting from local political challenges, a manual stop button was added to the application, enabling the user to halt the robot's movement when needed.
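The interaction between authentication, movement commands, and the manual stop button can be sketched as a small command handler. The example below is a simplified Python model, not the MIT App Inventor application or the ESP32 firmware; the class name, the command vocabulary, and the plain-text credential check are hypothetical and kept minimal for illustration.

```python
class RobotCommandHandler:
    """Accepts movement commands only from authenticated users (illustrative)."""

    MOVES = {"forward", "backward", "left", "right"}   # assumed command set

    def __init__(self, authorized_users):
        # Mapping of username to password; a real system should not
        # store or compare plain-text passwords like this.
        self._users = dict(authorized_users)
        self.state = "stopped"

    def handle(self, user, password, command):
        """Apply a command and return True if it was accepted."""
        if self._users.get(user) != password:
            return False                  # reject unauthenticated requests
        if command == "stop":
            self.state = "stopped"        # manual stop button
            return True
        if command in self.MOVES:
            self.state = command
            return True
        return False                      # unknown command: state unchanged


if __name__ == "__main__":
    handler = RobotCommandHandler({"user1": "secret"})
    print(handler.handle("user1", "secret", "forward"), handler.state)
    print(handler.handle("user1", "secret", "stop"), handler.state)
```

Keeping the stop command on the same path as the movement commands means a single handler decides the robot's state, which makes the stop behavior easy to verify.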

3. Results and Discussion

This section presents and analyzes the results of developing the robotic food delivery device. After the robot's body was assembled and integrated with its electronic components, a series of functional tests was performed to assess its performance under real-world conditions: moving to the target person, recognizing them via the camera, and delivering food by opening the drawer that contains it. Further tests evaluated the effectiveness of the robot's motion system and, using the facial recognition camera, its environmental awareness. The overall user experience was also evaluated. Together, these tests provide valuable information about the robot's advantages and limitations.

Firstly, the robot’s motors were tested to ensure that they could move the robot in multiple directions, as illustrated in Figure 13. The robot demonstrated considerable navigation flexibility: it was able to avoid obstacles and remain stable even in congested areas.

Secondly, with a camera that can detect and identify human faces, the robot was able to interact more intelligently and dynamically with its surroundings. Figure 14 illustrates how AI features were incorporated into the robot’s camera system. This capability opens the possibility of future improvements to the robot’s functionality, although these would require more complex environmental identification and decision-making algorithms.

To provide a positive user experience, the robot’s design prioritizes simplicity. As a prototype built with the available resources, it uses simple components and an unambiguous control interface to make operation intuitive. The idea is to mimic the fundamental functions of a waiter, which include taking orders, serving food and beverages, ensuring customer satisfaction, maintaining cleanliness and organization, and, most importantly, ensuring through computer vision that the food is delivered to the right person. This emphasis on usability makes the robot useful to a wide range of users: even non-technical individuals can operate it with minimal training.

According to the performance measurements, the robot translated commands into motion in 500 milliseconds on average. Such responsiveness is needed in real-time applications that require prompt delivery and a rapid response to challenges. Energy efficiency was another notable result: the robot can operate for long periods on a single charge, depending on the terrain and the load it carries. This endurance makes it suitable for a variety of real-life service environments that require continuous operation.

Finally, the study demonstrated the cost-effectiveness of the approach. Most components were sourced from old desktop computers, including the DVD drive that serves as the food drawer. The robot also used a simple wheeled chassis and other readily available components. This makes the robot well suited to educational and developmental purposes, and future improvements could be made without substantially increasing the cost. Together, these factors confirm that the study’s main objective was met: the development of a robotic food delivery system that is versatile, intelligent, affordable, and user-friendly. In light of these encouraging results, more extensive applications beyond food delivery, in which such robots operate autonomously, may be explored in the future. Although our prototype was tested in a controlled environment, future work will involve field testing under dynamic conditions, such as busy restaurant floors or outdoor paths. Aerial drones are a complementary delivery platform suited to longer distances or difficult terrain. In contrast, ground-based robots, such as the one proposed in this study, are more appropriate for indoor and short-distance deliveries, particularly when human interaction and safety are paramount.

4. Conclusions

In this study, a food delivery robotic system that mimics the functions of a waiter was designed and implemented, offering a practical way to automate food delivery. The work produced a simple and useful design that can traverse a variety of terrains successfully and efficiently. Lightweight materials and carefully selected motors enhance the robot’s mobility while maintaining its structural integrity. The robot is equipped with a motion coordination algorithm that enables it to move freely and respond appropriately to its surroundings. Furthermore, a user-friendly remote control interface was developed so that individuals with minimal training can operate the robot. Besides confirming the initial design goals, this study indicates that further advances are possible. Fully autonomous navigation may require artificial intelligence, and design parameters can be optimized to increase agility and operational speed. Robot applications could also be extended beyond food delivery to include environmental monitoring, disaster response, and surveillance. The present research provides a framework for future service robotics research as well as practical benefits, including reduced labor demands, improved service consistency, and faster delivery in busy service settings. It may also stimulate further creativity and real-world applications. To enhance adaptability and safety, future research will include scaling the system to real-world scenarios and integrating advanced AI navigation.

Statement of the Use of Generative AI and AI-Assisted Technologies in the Writing Process

During the preparation of this manuscript, the authors used OpenAI and Wordtune to double-check some of the provided content and the grammar, respectively. After using these tools, the authors reviewed and edited the content as needed and take full responsibility for the content of the published article.

Author Contributions

Conceptualization, methodology, data curation, writing—original draft: A.H., H.N., N.S., Z.M. and A.D. Conceptualization, methodology, data curation, writing—original draft, writing—review and editing, supervision: M.A. All authors have read and agreed to the published version of the manuscript.

Ethics Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available on request.

Funding

This research received no external funding.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Amer M, Yasin Z, Alsadi S, Foqha T, Samhan AAA, Giri J. Designing a 4 DoF Manipulator and a Production Line for Lifting and Transporting Pieces. In Proceedings of the 2nd International Conference on Emerging Trends in Engineering and Medical Sciences (ICETEMS), Nagpur, India, 22–23 November 2024. doi:10.1109/ICETEMS64039.2024.10965031.

- Leong PY, Ahmad NS. Exploring autonomous load-carrying mobile robots in indoor settings: A comprehensive review. IEEE Access 2024, 12, 131395–131417. doi:10.1109/ACCESS.2024.3435689.

- Sun Y, Guan L, Chang Z, Li C, Gao Y. Design of a low-cost indoor navigation system for food delivery robot based on multi-sensor information fusion. Sensors 2019, 19, 4980. doi:10.3390/s19224980.

- Abrar MM, Islam R, Shanto MAH. An Autonomous Delivery Robot to Prevent the Spread of Coronavirus in Product Delivery System. In Proceedings of the 11th IEEE Annual Ubiquitous Computing, Electronics and Mobile Communication Conference (UEMCON), New York, NY, USA, 28–31 October 2020; pp. 461–466. doi:10.1109/UEMCON51285.2020.9298108.

- Saravanan D, Perianayaki ERA, Pavithra R, Parthiban R. Barcode system for hotel food order with delivery robot. J. Phys. Conf. Ser. 2021, 1717, 012054. doi:10.1088/1742-6596/1717/1/012054.

- Patel A, Sharma P, Randhawa P. MedBuddy: The Medicine Delivery Robot. In Proceedings of the 9th International Conference on Reliability, Infocom Technologies and Optimization (ICRITO), Noida, India, 3–4 September 2021. doi:10.1109/ICRITO51393.2021.9596130.

- Abdalrdha ZK, Hussein NA, Jabar RS. The intelligent robot for serving food. Int. J. Sci. Res. Sci. Eng. Technol. 2022, 9, 273–283. doi:10.32628/ijsrset229627.

- Singh KJ, Kapoor DS, Abouhawwash M, Al-Amri JF, Mahajan S, Pandit AK. Behavior of delivery robot in human-robot collaborative spaces during navigation. Intell. Autom. Soft Comput. 2023, 35, 795–810. doi:10.32604/iasc.2023.025177.

- Hossain M. Autonomous delivery robots: A literature review. IEEE Eng. Manag. Rev. 2023, 51, 77–89. doi:10.1109/EMR.2023.3304848.

- Vorina A, Ojsteršek T, Pušnik D. Autonomous delivery robots and their contribution during the pandemic. Horiz.-Int. Sci. J. 2022, 31, 79–86. doi:10.20544/HORIZONS.A.31.2.22.P06.

- Qureshi AG, Taniguchi E. A scenario-based feasibility analysis of autonomous robot deliveries. Transp. Res. Procedia 2024, 79, 76–83. doi:10.1016/j.trpro.2024.03.012.

- Hossain M. Self-driving robots: A revolution in the local delivery. Calif. Manag. Rev. 2022, 1–6. Available online: https://cmr.berkeley.edu/assets/documents/pdf/2022-04-self-driving-robots-a-revolution-in-the-local-delivery.pdf (accessed on 15 August 2025).

- Kim N. Co-op Tile World: A Benchmarking Environment for Human-AI Cooperative Interaction. Doctoral Dissertation, Carnegie Mellon University, Pittsburgh, PA, USA, 2025.

- Strubelt H. Sidewalk autonomous delivery robots for last-mile parcel delivery. Adv. Logist. Syst.-Theory Pract. 2024, 18, 30–40. doi:10.32971/als.2024.004.

- Valdez M, Cook M. Examining the spatialities of artificial intelligence and robotics in transitions to more sustainable urban mobilities. Nor. Geogr. Tidsskr.-Nor. J. Geogr. 2024, 78, 313–323. doi:10.1080/00291951.2024.2432308.

- Waqar M, Bhatti I, Khan AH. Leveraging machine learning algorithms for autonomous robotics in real-time operations. Int. J. Adv. Eng. Technol. Innovat. 2024, 4, 1–24.

- Chung W, Iagnemma K. Wheeled robots. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 575–594.

- Westerlund M, Sharma A. Sustainable Last-Mile Delivery with Autonomous Robots. In Green Innovations in Supply Chain Management: Case Studies and Applications; Wiley: Hoboken, NJ, USA, 2025; pp. 17–32.

- Ali MN, Amer M, Elsisi M. Reliable IoT paradigm with ensemble machine learning for faults diagnosis of power transformers considering adversarial attacks. IEEE Trans. Instrum. Meas. 2023, 72, 3525413. doi:10.1109/TIM.2023.3300444.

- Vu VQ, Tran MQ, Amer M, Khatiwada M, Ghoneim SS, Elsisi M. A practical hybrid IoT architecture with deep learning technique for healthcare and security applications. Information 2023, 14, 379. doi:10.3390/info14070379.

- Elsisi M, Amer M, Dababat A, Su CL. A comprehensive review of machine learning and IoT solutions for demand side energy management, conservation, and resilient operation. Energy 2023, 281, 128256. doi:10.1016/j.energy.2023.128256.

- Cameron N. ESP32 microcontroller. In ESP32 Formats and Communication: Application of Communication Protocols with ESP32 Microcontroller; Apress: Berkeley, CA, USA, 2023; pp. 1–54.

- Cameron N. ESP32-CAM Camera. In ESP32 Formats and Communication: Application of Communication Protocols with ESP32 Microcontroller; Apress: Berkeley, CA, USA, 2023; pp. 447–488.

- PBV RR, Mandapati VS, Pilli SL, Manojna PL, Chandana TH, Hemalatha V. Home Security with IOT and ESP32 Cam—AI Thinker Module. In Proceedings of the 2024 International Conference on Cognitive Robotics and Intelligent Systems (ICC-ROBINS), Coimbatore, India, 17–19 April 2024; pp. 710–714.

- Amer M, Yahya A, Daraghmeh A, Dwaikat R, Nouri B. Design and Development of an Automatic Controlled Planting Machine for Agriculture in Palestine. Univ. J. Control Autom. 2020, 8, 32–39. doi:10.13189/ujca.2020.080202.

- Cameron N. Arduino Applied; Apress: New York, NY, USA, 2019.

- Peerzada P, Larik WH, Mahar AA. DC motor speed control through Arduino and L298N motor driver using PID controller. Int. J. Electr. Eng. Emerg. Technol. 2021, 4, 21–24.

- Sadegh-cheri M. Using the recycled parts of a computer DVD drive for fabrication of a low-cost Arduino-based syringe pump. J. Chem. Educ. 2021, 99, 521–525. doi:10.1021/acs.jchemed.1c00260.

- Tudić V, Kralj D, Hoster J, Tropčić T. Application of Computer Vision and Python Scripts as Educational Mechatronic Platforms for Engineering Development in STEM Technologies. arXiv 2021, doi:10.20944/preprints202112.0352.v1.

- Howse J. OpenCV Computer Vision with Python; Packt Publishing: Birmingham, UK, 2013; Volume 27.

- Top A, Gökbulut M. Android application design with mit app inventor for bluetooth based mobile robot control. Wirel. Pers. Commun. 2022, 126, 1403–1429. doi:10.1007/s11277-022-09797-6.