1. Introduction

Cooperative control of multi-agent systems has been studied intensively over the last two decades, because multi-agent systems have broad applications in diverse areas such as unmanned air vehicles [1], flocking [2], formation control [3] and sensor networks [4]. Consensus is an important problem in the area of multi-agent systems and has been studied comprehensively in the literature. The studies [5,6] are well-known reviews of the most critical problems in multi-agent systems and consensus.

In practice, each agent might be subject to uncertainty or unknown dynamics. Moreover, many existing works on leader-follower consensus consider a leader input that is nonzero and unknown to the followers. In leader-follower multi-agent control algorithms, the term “leader” refers to a designated agent or agents that set the reference trajectory or control commands for the other agents, known as “followers”. The leader’s role typically involves leading behavior, information dissemination, coordination and stability, dynamic response and control algorithms. The leader dictates the desired path, behavior or objectives that the followers are supposed to follow, which can include position, velocity or orientation. The leader also often shares information about the environment or the desired goals, enabling followers to make informed decisions. In addition, the presence of a leader can stabilize the multi-agent system by ensuring that all agents move cohesively toward a common objective, reducing the risk of inconsistent or chaotic behavior among the followers. The leader can adapt its behavior in response to external changes (such as obstacles or new objectives), guiding the followers to adapt their behavior accordingly. In some applications, the leader may implement specific control strategies to maintain a desired formation or behavior, while the followers adjust based on predefined rules or algorithms that reference the leader’s state. Finally, in practical applications such as robotics, autonomous vehicles and drone swarms, the leader-follower framework enhances coordination and cooperative behavior among agents, allowing them to achieve collective tasks efficiently. Consensus problems involving modeling uncertainties, a nonzero leader input and followers that are unaware of the leader’s input have been studied in diverse works.
The formation problem of a leader-follower multi-agent system in which the agents translate together has been studied in [7,8]. In [7,8], the authors assumed that only the leader has access to the reference velocity; accordingly, they proposed a decentralized adaptive method that allows each follower to exploit the reference velocity. In [9], a robust decentralized adaptive control approach based on neural networks was proposed to solve the consensus problem of multi-agent systems with uncertainties and external disturbances. In [10], a neural network was used to estimate the unknown nonlinearity of first-order agent dynamics, and an adaptive estimation law was applied to tune the neural network weights so as to compensate for this nonlinearity. As a major consequence, it was proven that both the synchronization tracking errors and the neural network weight estimation errors are uniformly ultimately bounded. Similarly to [10], the neural network approach was applied in [11] to high-order multi-agent systems with unknown nonlinear dynamics. In [12], the authors investigated distributed adaptive output synchronization of networked nonlinear SISO systems that can be transformed into the semi-strict feedback form. Assuming parameter uncertainty in the dynamics of each agent, they analyzed the output synchronization of the network by means of the backstepping method and an adaptive parameter update law. The authors of [13] studied first-order leader-follower consensus in which the unknown dynamics of each follower and the velocity of the leader were described by linearly parameterized models, and proved consensus stability and parameter convergence under adaptive control algorithms. Similarly to [13], the authors of [14] studied finite-time leader-following consensus of high-order multi-agent systems with unknown nonlinear dynamics. In [15], the authors studied decentralized adaptive tracking control of a second-order leader-follower system with unknown dynamics and relative position measurements.

Consensus algorithms are crucial in many real-world applications, enabling decentralized systems to reach agreement among distributed nodes. Knowing the size of a network is vital for optimizing resource allocation and ensuring efficient communication. Consensus algorithms can facilitate network size estimation by allowing nodes to exchange information about their local views of the network. Approaches such as gossip protocols let nodes share their knowledge iteratively, leading to a collective estimate of the total number of nodes in the system. This is particularly useful in mobile ad-hoc networks (MANETs) and Internet of Things (IoT) environments, where the network topology can change dynamically. In sensor networks and distributed data systems, data aggregation is essential for reducing communication overhead and improving data quality. Consensus algorithms aggregate data collected from various nodes to provide global insights without requiring each node to send its data directly to a central authority. Techniques such as averaging, voting and weighted sums allow nodes to collaboratively compute and share summary statistics, such as the average temperature or humidity reading, while maintaining data privacy and minimizing bandwidth usage. Unmanned Aerial Vehicles (UAVs) often operate in teams, requiring coordinated behaviors such as formation flying or collaborative search-and-rescue missions.
Consensus algorithms enable UAVs to agree on actions and trajectories in real time, ensuring that they operate cohesively despite potential communication delays or interruptions. Techniques such as consensus-based flocking algorithms allow each UAV to adjust its path based on the positions and velocities of its neighbors, facilitating smooth and efficient formation changes in dynamic environments. Consensus mechanisms are also fundamental to blockchain and distributed ledger technologies, ensuring that all nodes in a network agree on the validity of transactions. Algorithms such as Proof of Work (PoW), Proof of Stake (PoS) and Practical Byzantine Fault Tolerance (PBFT) facilitate trustless environments in which multiple parties can securely agree on a shared state without relying on a central authority; this application extends to cryptocurrencies, supply chain management and transparent voting systems. In multi-agent systems, consensus algorithms enable autonomous agents to make decisions collaboratively. Applications range from robotic swarms performing collective tasks, such as exploration and mapping, to smart grid systems that manage energy distribution. Agents employ consensus to synchronize their actions, exchange environmental information and adapt to changes in real time, which enhances the overall efficiency and resilience of the system. Consensus algorithms are also integral to fault tolerance in distributed systems. By ensuring agreement among healthy nodes despite faulty nodes or communication failures, these algorithms enable robust operation of systems such as cloud computing platforms and distributed databases. Algorithms such as Raft and Paxos are designed to maintain a consistent state among nodes even under partial failures, thereby preserving system reliability. In short, consensus algorithms are foundational to many modern technologies, supporting the coordination, data integrity and resilience of decentralized networks.
Their diverse applications across fields such as sensor networks, UAV control and blockchain underscore their importance in shaping the future of interconnected systems [16,17]. In recent years, the development of resilient control strategies for multi-agent systems has garnered significant attention, particularly in the face of potential cybersecurity threats. One notable contribution in this area is the work by Zhang et al. [18], which presents an adaptive neural consensus tracking control strategy designed to ensure robust coordination among agents while mitigating the effects of actuator attacks. By leveraging jointly connected topologies, the approach enhances the resilience and effectiveness of the system, providing a framework for future research and applications in dynamic and potentially compromised environments [18].

This paper presents the solution procedure for the distributed adaptive coordination problem of second-order leader-follower multi-agent systems with unknown nonlinear dynamics, under the constraints that only relative position measurements are available and that communication between agents is lost. The authors of a similar study [15] assumed that communication between agents is available; this assumption is not made in the present study.

This paper is organized as follows. Section 2 reviews basic notation, preliminaries of graph theory and the formal statement of the problem. Section 3 presents the main results of this study. Section 4 presents simulation results that illustrate the effectiveness of the proposed methods. Finally, concluding remarks on the PDAC and FDAC methods are given in Section 5.

2. Preliminaries and Problem Formulation

Let $$G=\left(V,\ E,\ \bm{A}\right)$$ be a graph of order $$N$$, where $$V=\{1,2,\ldots,N\}$$ is the node set, $$E\subseteq V\times V$$ is the set of edges, and $$\bm{A}$$ is the weighted adjacency matrix with nonnegative elements. An edge $$\left(i,j\right)$$ denotes that node $$j$$ has access to the information of node $$i$$. The graph $$G$$ is undirected if $$\left(i,j\right)\in E$$ implies $$\left(j,i\right)\in E$$ for every pair $$\left(i,j\right)$$. In an undirected graph, two nodes $$i$$ and $$j$$ are neighbors if $$\left(i,j\right)\in E$$. The set of neighbors of node $$i$$ is denoted by $$N_i=\{j\in V:(j,i)\in E,\ j\neq i\}$$. A path is a sequence of connected edges in a graph. An undirected graph is connected if a path exists between every pair of distinct nodes $$i$$ and $$j$$.

A self-loop is an edge that connects a node to itself, denoted $$\left(i,i\right)$$ for some node $$i\in V$$. In networked systems, self-loops can represent an agent or node that processes or reflects its own information. The definition $$E\subseteq V\times V$$ is deliberately broad, so $$E$$ may technically contain self-loops, although self-loops do not always fit the applications considered. In undirected graphs, self-loops do not affect adjacency between distinct nodes; they matter only when a node must be allowed a degree of connection with itself, as in social networks, where individuals may have a relationship with their own past actions or decisions. Accordingly, $$E_{distinct}\triangleq\{(i,j)\in E\mid i\neq j\}$$ is defined to single out the edges that connect distinct nodes. Since $$a_{ii}=0$$ is assumed below, self-loops play no role in the subsequent analysis.

In addition to the follower agents 1 to $$N$$, there is a leader labeled 0. Information is exchanged between the leader and those follower agents that are neighbors of the leader. The graph $$\bar{G}=\left(\bar{V},\ \bar{E},\ \bar{\bm{A}}\right)$$, with node set $$\bar{V}=V\cup\{0\}$$ and edge set $$\bar{E}\subseteq\bar{V}\times\bar{V}$$, represents the communication topology between the leader and the followers. The communication between the leader and the followers is assumed to be unidirectional, from the leader to the followers. The network topology is thus defined by a graph whose nodes represent agents and whose edges represent communication links. While the leader has direct links only to certain followers, agents without a direct link can still receive information through a networked relay system. Each follower agent typically communicates with its immediate neighbors within its communication range. An agent without a direct link to the leader can relay messages; that is, it can pass on the leader’s commands or state information through a communication chain (i.e., from the leader to its direct neighbors and further down the line). To ensure that all agents, including those without a direct link to the leader, can achieve consensus, consensus protocols let each agent update its state based on its own information and the information received from neighboring agents. For instance, at each time step, a follower updates its state by considering the states of its neighbors, thereby indirectly incorporating the leader’s influence. The message propagation process determines how the leader’s state or directives are broadcast throughout the network, using strategies such as flooding (where messages are rapidly disseminated) or controlled dissemination based on a time or distance metric. The implications of communication delays, packet loss and the potential need for robust communication protocols (such as acknowledgments or redundancy) to ensure that critical information from the leader reaches all agents could be discussed further.
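The relay mechanism described above can be illustrated with a minimal discrete-time sketch. It assumes a simple first-order update $$x_i\leftarrow x_i-\varepsilon\left(\sum_{j}a_{ij}(x_i-x_j)+a_{i0}(x_i-x_0)\right)$$ with a constant leader state $$x_0$$ and a hypothetical step size `eps`. This is not the adaptive controller developed in this paper; it only shows how a follower without a direct link to the leader is still driven to the leader’s value through its neighbors.

```python
def leader_follower_consensus(adj, leader_gain, x0, x_init, eps=0.1, steps=1000):
    """Discrete-time first-order leader-follower consensus (illustrative only).

    adj[i][j]: weight a_ij between followers i and j (0-based indices here)
    leader_gain[i]: weight a_i0 of the link from the leader to follower i
    x0: constant leader state
    """
    x = list(x_init)
    n = len(x)
    for _ in range(steps):
        new_x = []
        for i in range(n):
            # Disagreement with neighboring followers...
            coupling = sum(adj[i][j] * (x[i] - x[j]) for j in range(n))
            # ...plus disagreement with the leader (zero if there is no direct link).
            coupling += leader_gain[i] * (x[i] - x0)
            new_x.append(x[i] - eps * coupling)
        x = new_x  # synchronous update: every follower used the previous states
    return x


# Hypothetical path: leader -> follower 1 -> follower 2 -> follower 3
# (followers are stored at 0-based indices 0, 1, 2).
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
leader_gain = [1, 0, 0]  # only the first follower hears the leader directly
final = leader_follower_consensus(adj, leader_gain, x0=5.0, x_init=[0.0, -2.0, 3.0])
print([round(v, 4) for v in final])
```

Only the first follower hears the leader, yet all three final states approach 5.0. In this sketch the error evolves as $$(\bm{I}_N-\varepsilon\bm{H})$$ times the previous error, so the step size must satisfy $$0<\varepsilon<2/\lambda_{max}(\bm{H})$$ for convergence.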

Let the adjacency matrix $$\bar{\bm{A}}=\left[a_{ij}\right]\in\mathcal{R}^{\left(N+1\right)\times(N+1)}$$ be such that $$a_{ij}>0$$ if $$\left(j,i\right)\in\bar{E}$$ and $$a_{ij}=0$$ otherwise. It is assumed that $$a_{ii}=0$$ for all $$i\in\bar{V}$$ and $$a_{0j}=0$$ for all $$j\in V$$. The Laplacian matrix $$\bm{L}=\left[l_{ij}\right]\in\mathcal{R}^{N\times N}$$ of the follower graph is defined by $$l_{ii}=\sum_{j=1}^{N}a_{ij}$$ and $$l_{ij}=-a_{ij}$$ for $$j\neq i$$. Finally, the matrix $$\bm{H}$$ is defined as $$\bm{H}=\bm{L}+\bm{diag}(a_{10},\ \ldots,\ a_{N0})$$.
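The construction of $$\bm{L}$$ and $$\bm{H}$$ from $$\bar{\bm{A}}$$ can be sketched in plain Python as follows. The four-node topology used here (the leader linked only to follower 1, and followers 1, 2, 3 forming an undirected path) is a hypothetical example, not the topology used in Section 4.

```python
def laplacian_and_h(a_bar):
    """Build L and H = L + diag(a_10, ..., a_N0) from the (N+1)x(N+1) matrix A-bar.

    Row/column 0 of a_bar is the leader; rows/columns 1..N are the followers.
    """
    n = len(a_bar) - 1
    lap = [[0.0] * n for _ in range(n)]
    for i in range(1, n + 1):
        # l_ii = sum_{j=1}^{N} a_ij  (a_ii = 0 by assumption)
        lap[i - 1][i - 1] = float(sum(a_bar[i][j] for j in range(1, n + 1)))
        for j in range(1, n + 1):
            if j != i:
                lap[i - 1][j - 1] = float(-a_bar[i][j])  # l_ij = -a_ij
    h = [row[:] for row in lap]
    for i in range(1, n + 1):
        h[i - 1][i - 1] += a_bar[i][0]  # add the leader weight a_i0
    return lap, h


# Hypothetical topology: leader 0 -> follower 1; followers 1-2-3 form an undirected path.
a_bar = [[0, 0, 0, 0],   # a_0j = 0: the leader receives no information
         [1, 0, 1, 0],
         [0, 1, 0, 1],
         [0, 0, 1, 0]]
lap, h = laplacian_and_h(a_bar)
print(h)  # [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]
```

Every row of $$\bm{L}$$ sums to zero, and $$\bm{H}$$ differs from $$\bm{L}$$ only on the diagonal entries of followers that hear the leader directly.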

Lemma 1. (Ni and Cheng [19]):

(i) The matrix $$\bm{H}$$ has nonnegative eigenvalues.

(ii) The matrix $$\bm{H}$$ is positive definite if and only if the graph $$\bar{G}$$ is connected.

Let $$\lambda_1,\ \lambda_2,\ \ldots,\ \lambda_N$$ be the eigenvalues of $$\bm{H}$$, and let $$\lambda_{min}(\bm{H})$$ denote the smallest of them. Lemma 1 implies that $$\lambda_{min}\left(\bm{H}\right)>0$$ if and only if the graph $$\bar{G}$$ is connected.
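Lemma 1 can be checked numerically on small examples. The sketch below, assuming a symmetric $$\bm{H}$$, tests positive definiteness with a Cholesky factorization and finds $$\lambda_{min}(\bm{H})$$ by bisection on a shift $$t$$, using the fact that $$\bm{H}-t\bm{I}_N\succ0$$ exactly when $$t<\lambda_{min}(\bm{H})$$. The two matrices are hypothetical: the same follower path with and without the leader link $$a_{10}=1$$.

```python
import math


def is_positive_definite(m):
    """Return True iff the symmetric matrix m admits a Cholesky factorization."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:  # non-positive pivot: not positive definite
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


def smallest_eigenvalue(m, iters=100):
    """lambda_min(m) by bisection: m - t*I is positive definite iff t < lambda_min."""
    n = len(m)
    radius = max(sum(abs(v) for v in row) for row in m)  # Gershgorin bound on |lambda|
    lo, hi = -radius - 1.0, radius + 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        shifted = [[m[i][j] - (mid if i == j else 0.0) for j in range(n)] for i in range(n)]
        if is_positive_definite(shifted):
            lo = mid
        else:
            hi = mid
    return lo


h_connected = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]  # a_10 = 1
h_no_leader = [[1.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]  # a_10 = 0, so H = L
for name, h in (("connected", h_connected), ("leader cut off", h_no_leader)):
    print(name, is_positive_definite(h), round(smallest_eigenvalue(h), 6))
```

For the connected case the result matches the closed form $$2-2\cos(\pi/7)\approx0.198$$ of this tridiagonal matrix. Removing the leader link leaves $$\bm{H}=\bm{L}$$, which is singular, so $$\lambda_{min}=0$$ and $$\bm{H}$$ is not positive definite.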

Lemma 2. (Boyd et al. [19]): Let $$\bm{S}$$ be a symmetric real matrix partitioned as $$\bm{S}=\left[\begin{matrix}\bm{S}_{11}&\bm{S}_{12}\\\bm{S}_{12}^T&\bm{S}_{22}\\\end{matrix}\right]$$. Then $$\bm{S}\succ0$$ if and only if either of the following equivalent conditions holds:

(i) $$\bm{S}_{11}\succ0$$ and $$\bm{S}_{22}-\bm{S}_{12}^T\bm{S}_{11}^{-1}\bm{S}_{12}\succ0$$

(ii) $$\bm{S}_{22}\succ0$$ and $$\bm{S}_{11}-\bm{S}_{12}\bm{S}_{22}^{-1}\bm{S}_{12}^T\succ0$$
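Lemma 2 is the standard Schur-complement characterization of positive definiteness. The sketch below checks it empirically in plain Python: it draws random symmetric $$4\times4$$ matrices (a hypothetical test setup), splits them into $$2\times2$$ blocks, and compares a direct Cholesky test of $$\bm{S}\succ0$$ with conditions (i) and (ii).

```python
import math
import random


def is_pd(m):
    """Cholesky test for a symmetric matrix: True iff it is positive definite."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


def inverse(m):
    """Gauss-Jordan inverse with partial pivoting (m is assumed nonsingular)."""
    n = len(m)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def subtract(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def lemma2_conditions(s, k):
    """Split s after row/column k and evaluate conditions (i) and (ii) of Lemma 2."""
    s11 = [row[:k] for row in s[:k]]
    s12 = [row[k:] for row in s[:k]]
    s22 = [row[k:] for row in s[k:]]
    s12t = transpose(s12)
    # Short-circuit 'and': a block is inverted only after it passed its own PD test.
    cond_i = is_pd(s11) and is_pd(subtract(s22, matmul(matmul(s12t, inverse(s11)), s12)))
    cond_ii = is_pd(s22) and is_pd(subtract(s11, matmul(matmul(s12, inverse(s22)), s12t)))
    return cond_i, cond_ii


rng = random.Random(0)
trials, agree, pd_count = 200, 0, 0
for _ in range(trials):
    b = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(4)]
    shift = rng.uniform(-0.5, 2.0)
    s = [[0.5 * (b[i][j] + b[j][i]) + (shift if i == j else 0.0) for j in range(4)]
         for i in range(4)]
    full = is_pd(s)
    cond_i, cond_ii = lemma2_conditions(s, 2)
    agree += (full == cond_i == cond_ii)
    pd_count += full
print(f"{agree}/{trials} samples agree; {pd_count} were positive definite")
```

The random diagonal shift produces a mix of definite and indefinite samples, so both directions of the equivalence are exercised.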

Consider a group of agents consisting of $$N$$ followers and a leader. The second-order dynamics of the follower agents are given by

$$\dot{x}_i(t)=v_i(t),\qquad \dot{v}_i(t)=u_i(t)+f_i(t),\qquad i=1,\ldots,N \tag{1}$$

where $$x_i\in\mathcal{R}$$, $$v_i\in\mathcal{R}$$, $$u_i\in\mathcal{R}$$ and $$f_i\in\mathcal{R}$$ are the position, velocity, control input and unknown time-varying dynamics of the $$i$$th follower, respectively.

The dynamics of the leader are governed by

$$\dot{x}_0(t)=v_0(t),\qquad \dot{v}_0(t)=u_0(t) \tag{2}$$

where $$x_0\in\mathcal{R}$$, $$v_0\in\mathcal{R}$$ and $$u_0\in\mathcal{R}$$ are the position, velocity and acceleration of the leader, respectively.

The position and velocity tracking errors of the $$i$$th agent are defined as $${\widetilde{x}}_i\left(t\right)=x_i\left(t\right)-x_0(t)$$ and $${\widetilde{v}}_i\left(t\right)=v_i\left(t\right)-v_0(t)$$, respectively. The relative position and relative velocity measurements are defined as

$$e_{xi}=\sum_{j=0}^{N}a_{ij}\left(x_i-x_j\right),\qquad e_{vi}=\sum_{j=0}^{N}a_{ij}\left(v_i-v_j\right)$$

where $$a_{ij}$$ is the $$(i,j)$$ entry of the adjacency matrix $$\bar{\bm{A}}$$.
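Assuming the standard form $$e_{xi}=\sum_{j=0}^{N}a_{ij}(x_i-x_j)$$ for the relative position measurement (the form consistent with the identity $$\bm{e}_x=\bm{H}\widetilde{\bm{x}}$$ used in the proof of Theorem 2), the sketch below checks that identity on a hypothetical three-follower example. Note that $$x_i-x_j=\widetilde{x}_i-\widetilde{x}_j$$ and $$x_i-x_0=\widetilde{x}_i$$, which is why only the tracking errors appear on the right-hand side.

```python
def relative_position_errors(a_bar, x):
    """e_xi = sum_{j=0}^{N} a_ij (x_i - x_j) for followers i = 1..N; x[0] is the leader."""
    n = len(a_bar) - 1
    return [sum(a_bar[i][j] * (x[i] - x[j]) for j in range(n + 1)) for i in range(1, n + 1)]


def h_matrix(a_bar):
    """H = L + diag(a_10, ..., a_N0), built directly from A-bar (row/column 0 = leader)."""
    n = len(a_bar) - 1
    h = [[float(-a_bar[i][j]) for j in range(1, n + 1)] for i in range(1, n + 1)]
    for i in range(1, n + 1):
        # Diagonal: sum of all incoming weights, including the leader weight a_i0.
        h[i - 1][i - 1] = float(sum(a_bar[i][j] for j in range(n + 1) if j != i))
    return h


a_bar = [[0, 0, 0, 0],
         [1, 0, 1, 0],
         [0, 1, 0, 1],
         [0, 0, 1, 0]]        # hypothetical topology, leader linked to follower 1 only
x = [2.0, 0.5, -1.0, 4.0]      # x[0]: leader position, x[1..3]: follower positions
x_tilde = [xi - x[0] for xi in x[1:]]
h = h_matrix(a_bar)
e_direct = relative_position_errors(a_bar, x)
e_from_h = [sum(h[i][j] * x_tilde[j] for j in range(3)) for i in range(3)]
print(e_direct)  # [0.0, -6.5, 5.0]
print(e_from_h)  # [0.0, -6.5, 5.0]
```

The same argument with velocities in place of positions gives $$\bm{e}_v=\bm{H}\widetilde{\bm{v}}$$.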

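Definition 1. Second-order leader-follower consensus of the multi-agent system described by (1) and (2) is said to be achieved if

$$\lim_{t\to\infty}\left|x_i(t)-x_0(t)\right|=0\quad\text{and}\quad\lim_{t\to\infty}\left|v_i(t)-v_0(t)\right|=0,\qquad i=1,\ldots,N.$$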

3. Solution of the Communication-Loss Problem

This section presents the solution procedure for the communication-loss problem in the network of the leader and the followers. Based on classical adaptive control techniques [15,16], the unknown acceleration of the leader, $$u_0$$, and the unknown dynamics of each agent, $$f_i(t)$$, can be (approximately) parameterized in the linear regression form

$$u_0(t)=\bm{\phi}_0^T(t)\bm{\theta}_0,\qquad f_i(t)=\bm{\phi}_i^T(t)\bm{\theta}_i$$

where $$\bm{\phi}_0\in\mathcal{R}^{m_0}$$ and $$\bm{\phi}_i\in\mathcal{R}^{m_i}$$ are the basis functions associated with the parameters $$\bm{\theta}_0$$ and $$\bm{\theta}_i$$, and $$m_0$$ and $$m_i$$ are their dimensions. The arrays $$\bm{\theta}_0\in\mathcal{R}^{m_0}$$ and $$\bm{\theta}_i\in\mathcal{R}^{m_i}$$ are time-invariant parameters of the leader’s acceleration and the agents’ uncertainties, respectively, and are unknown to each follower.

To compensate for $$u_0$$ and $$f_i$$, each follower must estimate the parameter arrays $$\bm{\theta}_0$$ and $$\bm{\theta}_i$$. Assume that the $$i$$th follower estimates $$\bm{\theta}_0$$ and $$\bm{\theta}_i$$ by $${\hat{\bm{\theta}}}_{0i}$$ and $${\hat{\bm{\theta}}}_i$$, respectively, and, correspondingly, $$u_0$$ and $$f_i$$ by $${\hat{u}}_{0i}$$ and $${\hat{f}}_i$$, so that

$$\hat{u}_{0i}=\bm{\phi}_0^T(t)\hat{\bm{\theta}}_{0i},\qquad \hat{f}_i=\bm{\phi}_i^T(t)\hat{\bm{\theta}}_i$$

When velocity measurements of the agents are not available, a filter can be implemented for each follower agent following the procedure of [22],

where $$w_i\in\mathcal{R}$$ and $$\vartheta_i\in\mathcal{R}$$ are the filter output and the auxiliary filter state, respectively, and the positive constants $$a$$ and $$b$$ are design parameters. By eliminating the auxiliary filter state $$\vartheta_i$$, (7) can be written as

Now, the following adaptive control input is introduced for system (1) as

where $$\alpha$$ is a positive constant parameter. In (9), the term $$-\alpha\left(w_i+e_{xi}\right)$$ is the stabilizing part, and the adaptive terms $$\bm{\phi}_i^T\left(t\right){\hat{\bm{\theta}}}_i$$ and $$\bm{\phi}_0^T\left(t\right){\hat{\bm{\theta}}}_{0i}$$ compensate for the unknown dynamics of each agent and the leader acceleration, respectively.

Before giving the adaptive laws for the estimated parameter arrays $${\hat{\bm{\theta}}}_{0i}$$ and $${\hat{\bm{\theta}}}_i$$, two auxiliary arrays are first defined as

Designing the adaptive laws for $${\hat{\bm{\theta}}}_{0i}$$ and $${\hat{\bm{\theta}}}_i$$ is equivalent to designing adaptive laws for the auxiliary arrays $$\bm{\psi}_{0i}$$ and $$\bm{\psi}_i$$, which are given as

where $$\beta$$ and $$\gamma$$ are positive constant parameters. Substituting (10) into (11) yields

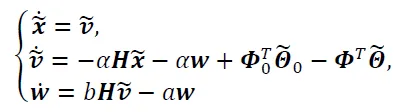

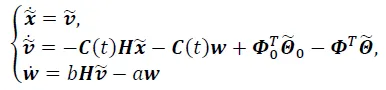

Applying (7) and (9) to systems (1) and (2), the closed-loop system can be written as

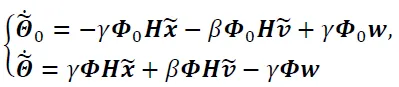

With the adaptive laws

where $$\widetilde{\bm{x}}=col\left\{{\widetilde{x}}_1,\ \ldots,\ {\widetilde{x}}_N\right\}$$, $$\widetilde{\bm{v}}=col\left\{{\widetilde{v}}_1,\ \ldots,\ {\widetilde{v}}_N\right\}$$, $$\bm{w}=col\left\{w_1,\ \ldots,\ w_N\right\}$$, $${\widetilde{\bm{\large\varTheta}}}_0=col\left\{{\widetilde{\bm{\theta}}}_{01},\ \ldots,\ {\widetilde{\bm{\theta}}}_{0N}\right\}$$, $$\widetilde{\bm{\large\varTheta}}=col\left\{{\widetilde{\bm{\theta}}}_1,\ \ldots,\ {\widetilde{\bm{\theta}}}_N\right\}$$, $$\bm{\large\varPhi}_0=\bm{I}_N\otimes\bm{\phi}_0(t)$$, $$\bm{\large\varPhi}=\bm{diag}\left\{\bm{\phi}_1,\ldots,\ \bm{\phi}_N\right\}$$ and also $${\widetilde{\bm{\theta}}}_{0i}={\hat{\bm{\theta}}}_{0i}-\bm{\theta}_0$$, $${\widetilde{\bm{\theta}}}_i={\hat{\bm{\theta}}}_i-\bm{\theta}_i$$.

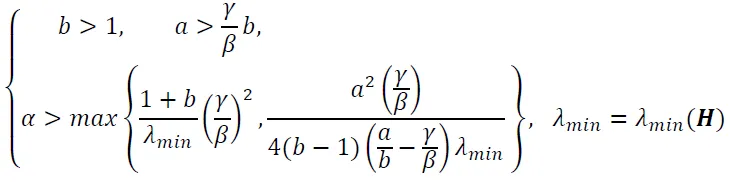

The following theorem presents a sufficient condition for the stability of the closed-loop system (13) and (14).

Theorem 1. (PDAC Method): Suppose that, in the leader-following system (1) and (2), $$G$$ is undirected, the leader has directed paths to all followers in $$\bar{G}$$, and the basis functions $$\bm{\phi}_0$$ and $$\bm{\phi}_i$$ are uniformly bounded. Then, leader-following consensus under (9) and (11) is achieved if the following conditions are satisfied

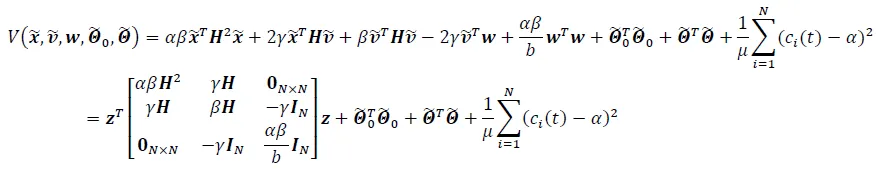

Proof. Let $$\bm{z}=\begin{bmatrix}\widetilde{\bm{x}}^T&\widetilde{\bm{v}}^T&\bm{w}^T\end{bmatrix}^T$$ and consider the following Lyapunov function candidate

The function $$V$$ is positive definite if and only if the matrix $$\bm{P}$$ is positive definite. By Lemma 2, $$\bm{P}$$ is positive definite if and only if $$\left[\begin{matrix}\alpha\beta\bm{H}^2&\gamma\bm{H}\\\gamma\bm{H}&\beta\bm{H}\\\end{matrix}\right]-\frac{b}{\alpha\beta}\left[\begin{matrix}\mathbf{0}_{N\times N}\\-\gamma\bm{I}_N\\\end{matrix}\right]\left[\begin{matrix}\mathbf{0}_{N\times N}&-\gamma\bm{I}_N\\\end{matrix}\right]\succ0$$ and $$\frac{\alpha\beta}{b}\bm{I}_N\succ0$$. Moreover, $$\left[\begin{matrix}\alpha\beta\bm{H}^2&\gamma\bm{H}\\\gamma\bm{H}&\beta\bm{H}\\\end{matrix}\right]-\frac{b}{\alpha\beta}\left[\begin{matrix}\mathbf{0}_{N\times N}\\-\gamma\bm{I}_N\\\end{matrix}\right]\left[\begin{matrix}\mathbf{0}_{N\times N}&-\gamma\bm{I}_N\\\end{matrix}\right]=\left[\begin{matrix}\alpha\beta\bm{H}^2&\gamma\bm{H}\\\gamma\bm{H}&\beta\bm{H}-\frac{b}{\alpha\beta}\gamma^2\bm{I}_N\\\end{matrix}\right]\succ0$$ if and only if $$\alpha\beta\bm{H}^2\succ0$$ and $$\beta\bm{H}-\frac{\left(1+b\right)}{\alpha\beta}\gamma^2\bm{I}_N\succ0$$; the latter matrix is the Schur complement of $$\alpha\beta\bm{H}^2$$, because $$\gamma\bm{H}\left(\alpha\beta\bm{H}^2\right)^{-1}\gamma\bm{H}=\frac{\gamma^2}{\alpha\beta}\bm{I}_N$$. Since the graph $$G$$ is undirected and the leader has directed paths to all followers in $$\bar{G}$$, Lemma 1 implies that $$\bm{H}$$ is a symmetric positive definite matrix; together with $$\alpha,\ \beta,\ b>0$$, this gives $$\frac{\alpha\beta}{b}\bm{I}_N\succ0$$ and $$\alpha\beta\bm{H}^2\succ0$$. Furthermore, since $$\bm{H}$$ is symmetric, $$\bm{H}\succeq\lambda_{min}\bm{I}_N$$; hence, if $$\alpha>\frac{1+b}{\lambda_{min}}\left(\frac{\gamma}{\beta}\right)^2$$, then $$\beta\bm{H}-\frac{\left(1+b\right)}{\alpha\beta}\gamma^2\bm{I}_N\succ0$$, which yields $$\bm{P}\succ0$$, so $$V$$ is a positive definite function.

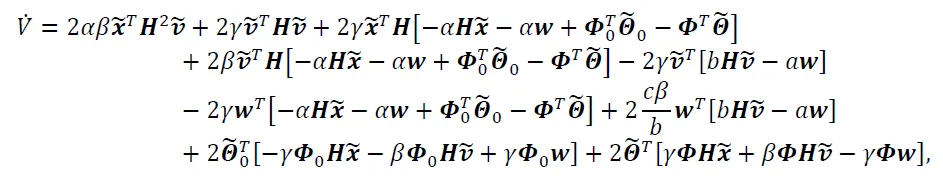

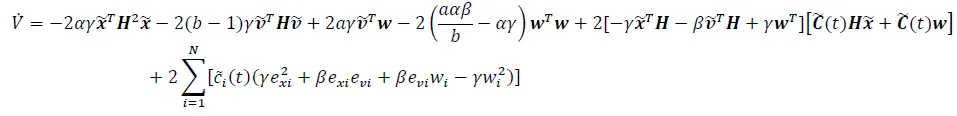

Differentiating $$V$$ with respect to time along (13) and (14) gives

Simplifying (17) and rearranging terms yields

The matrix $$\bm{Q}$$ is positive definite if and only if $$\begin{bmatrix}2(b-1)\gamma\boldsymbol{H}&-a\gamma\boldsymbol{I}_N\\-a\gamma\boldsymbol{I}_N&2\left(\frac{a\alpha\beta}{b}-\alpha\gamma\right)\boldsymbol{I}_N\end{bmatrix}\succ0$$ and $$2\alpha\gamma\bm{H}^2\succ0$$. Since $$2\left(\frac{a\alpha\beta}{b}-\alpha\gamma\right)=2\alpha\beta\left(\frac{a}{b}-\frac{\gamma}{\beta}\right)$$, condition (ii) of Lemma 2 shows that this block matrix is positive definite if and only if $$\frac{a}{b}-\frac{\gamma}{\beta}>0$$ and $$2\left(b-1\right)\gamma\bm{H}-\frac{a^2\gamma^2}{2\alpha\beta\left(\frac{a}{b}-\frac{\gamma}{\beta}\right)}\bm{I}_N\succ0$$; the latter also implies $$2\left(b-1\right)\gamma\bm{H}\succ0$$, which requires $$b>1$$. Because $$\bm{H}\succeq\lambda_{min}\bm{I}_N$$, the second inequality holds whenever $$\alpha>\frac{a^2\left(\frac{\gamma}{\beta}\right)}{4(b-1)\left(\frac{a}{b}-\frac{\gamma}{\beta}\right)\lambda_{min}}$$. If condition (15) is satisfied, these inequalities hold. Hence, the matrix $$\bm{Q}$$ is positive definite and $$\dot{V}$$ is negative semi-definite.

The function $$V$$ is nonnegative, so it is bounded below, and $$\dot{V}$$ is negative semi-definite, so $$V$$ is nonincreasing. It follows that $$\bm{z}$$, $${\widetilde{\bm{\varTheta}}}_0$$ and $$\widetilde{\bm{\varTheta}}$$ are bounded. Thus, from (13), (14) and the uniform boundedness of the basis functions $$\bm{\phi}_0$$ and $$\bm{\phi}_i$$, $$\dot{\bm{z}}$$ is bounded. Differentiating $$\dot{V}$$ in (18) along (13) and (14) shows that $$\ddot{V}$$ is bounded, so $$\dot{V}$$ is uniformly continuous. By Barbalat’s Lemma [20], $$\lim\limits_{t\to\infty}\dot{V}(t)=0$$, and since $$\bm{Q}\succ0$$, this yields $$\lim\limits_{t\to\infty}\bm{z}(t)=0$$. □
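The two threshold conditions on $$\alpha$$ used in this proof can be checked numerically. The sketch below uses a hypothetical tridiagonal $$\bm{H}$$ and the parameter values $$a=1,\ b=2,\ \beta=3,\ \gamma=1$$ borrowed from Specific Example 1. It builds the two reduced block matrices whose positive definiteness the proof requires (the one obtained from $$\bm{P}$$ and the $$2N\times2N$$ block of $$\bm{Q}$$) and tests each with a Cholesky factorization at 90% and 110% of the corresponding lower bound on $$\alpha$$.

```python
import math


def is_pd(m):
    """Cholesky test for a symmetric matrix: True iff it is positive definite."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][i] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


def blocks(tl, tr, br):
    """Assemble the symmetric matrix [[tl, tr], [tr^T, br]] from N x N blocks."""
    n = len(tl)
    top = [tl[i] + tr[i] for i in range(n)]
    bottom = [[tr[j][i] for j in range(n)] + br[i] for i in range(n)]
    return top + bottom


def p_block(h, alpha, b, beta, gamma):
    """[[alpha*beta*H^2, gamma*H], [gamma*H, beta*H - b*gamma^2/(alpha*beta) I]]."""
    n = len(h)
    h2 = [[sum(h[i][k] * h[k][j] for k in range(n)) for j in range(n)] for i in range(n)]
    shift = b * gamma ** 2 / (alpha * beta)
    return blocks([[alpha * beta * v for v in row] for row in h2],
                  [[gamma * v for v in row] for row in h],
                  [[beta * h[i][j] - (shift if i == j else 0.0) for j in range(n)]
                   for i in range(n)])


def q_block(h, alpha, a, b, beta, gamma):
    """[[2(b-1)*gamma*H, -a*gamma*I], [-a*gamma*I, 2*alpha*beta*(a/b - gamma/beta) I]]."""
    n = len(h)
    d = 2.0 * alpha * beta * (a / b - gamma / beta)
    eye = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    return blocks([[2.0 * (b - 1.0) * gamma * v for v in row] for row in h],
                  [[-a * gamma * v for v in row] for row in eye],
                  [[d * v for v in row] for row in eye])


h = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]  # hypothetical H
lam_min = 2.0 - 2.0 * math.cos(math.pi / 7)  # closed-form lambda_min of this H
a, b, beta, gamma = 1.0, 2.0, 3.0, 1.0
bound_p = (1.0 + b) / lam_min * (gamma / beta) ** 2
bound_q = a ** 2 * (gamma / beta) / (4.0 * (b - 1.0) * (a / b - gamma / beta) * lam_min)
for factor in (0.9, 1.1):
    p_ok = is_pd(p_block(h, factor * bound_p, b, beta, gamma))
    q_ok = is_pd(q_block(h, factor * bound_q, a, b, beta, gamma))
    print(f"{factor:.1f} x bound: P-block PD = {p_ok}, Q-block PD = {q_ok}")
```

Because both Schur complements reduce to $$\bm{H}$$ minus a multiple of $$\bm{I}_N$$, each bound is tight for the given $$\lambda_{min}$$: the blocks fail the Cholesky test just below the bound and pass just above it.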

Specific Example 1. Consider a multi-agent system with one leader and one follower. Assume that $$u_0(t)=1.0$$ and $$f_1(t)=1.0$$, and that $$a_{10}=1.0$$; then

By selecting the basis functions $$\phi_0=1.0$$ and $$\phi_1=1.0$$, the adaptive laws based on Equation (11) can be written as

and, from Equation (10), $${\hat{\theta}}_{01}$$ and $${\hat{\theta}}_1$$ can be written as

Differentiating the above equations gives the following set of differential equations

Finally, the closed-loop system is given as follows

By defining $$\omega={\widetilde{\theta}}_{01}-{\widetilde{\theta}}_1$$ and selecting $$a=1,\ b=2,\ \alpha=2,\ \beta=3,\ \gamma=1$$ so that (15) is satisfied, (22) and (23) can be written as

The stability of system (24) depends on the eigenvalues of the matrix $$\mathbf{\Xi}$$, which are $$\lambda=-0.2445\pm3.3938i,\ -0.2555\pm0.3278i$$. Since all of them have negative real parts, (24) is exponentially stable.
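With a single follower, $$\bm{L}$$ is the $$1\times1$$ zero matrix, so $$\bm{H}=a_{10}=1$$ and $$\lambda_{min}=1$$. The sketch below evaluates, for the parameter values of this example, the inequalities that appear in the proofs of Theorems 1 and 2: $$b>1$$, $$\frac{a}{b}>\frac{\gamma}{\beta}$$, and the two lower bounds on $$\alpha$$. Treating these as the content of condition (15) is an assumption, since (15) itself is not reproduced here.

```python
def alpha_lower_bounds(a, b, beta, gamma, lam_min):
    """Lower bounds on alpha from the P > 0 and Q > 0 steps of the proofs."""
    bound_p = (1.0 + b) / lam_min * (gamma / beta) ** 2
    bound_q = a ** 2 * (gamma / beta) / (4.0 * (b - 1.0) * (a / b - gamma / beta) * lam_min)
    return bound_p, bound_q


a, b, alpha, beta, gamma = 1.0, 2.0, 2.0, 3.0, 1.0  # values chosen in Specific Example 1
lam_min = 1.0                                      # one follower: H = a_10 = 1
bound_p, bound_q = alpha_lower_bounds(a, b, beta, gamma, lam_min)
print("b > 1:", b > 1.0)
print("a/b > gamma/beta:", a / b > gamma / beta)
print(f"bounds on alpha: {bound_p:.4f}, {bound_q:.4f}; "
      f"alpha = {alpha} exceeds both: {alpha > max(bound_p, bound_q)}")
```

Both lower bounds (about 0.333 and 0.5) lie well below the chosen $$\alpha=2$$, which is consistent with the statement that these parameters satisfy (15).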

Remark 1. To satisfy the condition on the control gain $$\alpha$$ in Theorem 1, centralized information about the communication graph must be known in advance to design the control gain. For this reason, the control algorithm (9), (11) proposed for the multi-agent system (1), (2) is not distributed. To design a fully distributed consensus algorithm, the adaptive gain method introduced in [21,22] is therefore applied. The question of whether the necessary eigenvalues can be determined in a distributed manner is pertinent and highlights the challenges inherent in decentralized control systems. In multi-agent systems, the eigenvalues of the coupling matrix (often derived from the graph that represents the connectivity of the agents) play a critical role in assessing stability, convergence properties and performance, and they relate to the system’s ability to achieve consensus, formation and other control objectives. Techniques are available for the distributed computation of eigenvalues, such as consensus algorithms or distributed iterative methods (e.g., power iteration and methods based on local coordination), but these approaches can be computationally intensive and may require many iterations to converge to an accurate value. Their feasibility largely depends on the graph topology and the connectivity of the network. In many practical scenarios, prior knowledge of certain eigenvalues (e.g., the largest eigenvalue, which is correlated with the system’s stability) can significantly simplify the design and implementation of control strategies, since controllers can then be designed with specific bandwidth and robustness properties in mind. In the absence of such knowledge, algorithms for the distributed estimation of eigenvalues can be employed, and approximate methods that use local information to infer global properties can sometimes be effective. Distributed algorithms can leverage the relative positions and velocities of neighboring agents to converge asymptotically to the desired values. Although eigenvalues can be determined in a distributed manner, the effectiveness and efficiency of such an approach depend on the specific system dynamics and network topology. Therefore, prior knowledge of these eigenvalues, when available, can greatly enhance the design and performance of the control system.
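As an illustration of the power-iteration approach mentioned in Remark 1, the sketch below estimates $$\lambda_{min}(\bm{H})$$ by running power iteration on $$\bm{H}$$ (to obtain $$\lambda_{max}$$) and then on the shifted matrix $$\lambda_{max}\bm{I}_N-\bm{H}$$, whose dominant eigenvalue is $$\lambda_{max}-\lambda_{min}$$. It is a centralized sketch under the assumption that $$\bm{H}$$ is symmetric positive semi-definite; the matrix, iteration count and random seed are hypothetical. A distributed variant would replace the matrix-vector products and norms by neighbor exchanges and consensus-based averaging, which is not shown here.

```python
import math
import random


def power_iteration(m, iters=2000, seed=0):
    """Dominant eigenvalue of a symmetric matrix via power iteration and the Rayleigh quotient."""
    n = len(m)
    rng = random.Random(seed)
    # Random start: almost surely not orthogonal to the dominant eigenvector.
    v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        w = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    mv = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
    return sum(x * y for x, y in zip(v, mv))  # Rayleigh quotient v^T M v with ||v|| = 1


def smallest_eigenvalue_by_shift(h, iters=2000):
    """lambda_min(H) = lambda_max - lambda_max(lambda_max*I - H) for symmetric PSD H."""
    n = len(h)
    lam_max = power_iteration(h, iters)
    shifted = [[(lam_max if i == j else 0.0) - h[i][j] for j in range(n)] for i in range(n)]
    return lam_max - power_iteration(shifted, iters)


h = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]  # hypothetical H
print(smallest_eigenvalue_by_shift(h))
```

For this matrix the estimate agrees with the closed form $$2-2\cos(\pi/7)\approx0.198$$. The number of iterations needed grows as the gap between the two largest eigenvalues of the iterated matrix shrinks, which reflects the computational cost noted in the remark.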

Following Remark 1, a fully distributed adaptive control algorithm is introduced. Consider the following control law

where $$c_i(t)$$ is the adaptive gain.

Before giving the adaptive law for $$c_i(t)$$, an auxiliary parameter is defined as

where $$\mu$$ is a positive constant parameter. The adaptation law for $$\eta_i$$ is given as

The parameters $$a$$, $$b$$, $$\beta$$ and $$\gamma$$ were introduced previously in (7) and (11). Similarly to (12), substituting (26) into (27) yields

Applying (25) to system (1), (2), the feedback form of the system is obtained as

where $$\bm{C}(t)=diag\{c_1(t),...,c_N(t)\}$$.

Now, the second theorem of this study is presented.

Theorem 2. (FDAC Method): Suppose that, in the leader-following system (1) and (2), $$G$$ is undirected, the leader has directed paths to all followers in $$\bar{G}$$, and the basis functions $$\bm{\phi}_0$$ and $$\bm{\phi}_i$$ are uniformly bounded. Then, leader-following consensus under (11), (25) and (27) is achieved if the following conditions are satisfied

Proof. Let $$\bm{z}=\begin{bmatrix}\widetilde{\boldsymbol{x}}^T&\widetilde{\boldsymbol{v}}^T&\boldsymbol{w}^T\end{bmatrix}^T$$ and consider the following Lyapunov function candidate

where $$\alpha$$ is a constant of the proposed Lyapunov function that remains to be determined. It is selected such that $$\alpha>max\left\{\frac{1+b}{\lambda_{min}}\left(\frac{\gamma}{\beta}\right)^2,\frac{a^2\left(\frac{\gamma}{\beta}\right)}{4(b-1)\left(\frac{a}{b}-\frac{\gamma}{\beta}\right)\lambda_{min}}\ \right\}$$. As in Theorem 1, it can then be shown that the function $$V$$ is positive definite.

Differentiating $$V$$ with respect to time along (14), (28) and (29) gives

Defining $${\widetilde{c}}_i\left(t\right)=c_i\left(t\right)-\alpha$$ and $$\tilde{\bm{C}}(t)=\bm{diag}\{\tilde{c}_1(t),...,\tilde{c}_N(t)\}$$ and substituting them into (32) gives

If $$\bm{e}_x=\bm{col}\left\{e_{x1},\ \ldots,\ e_{xN}\right\}$$ and $$\bm{e}_v=\bm{col}\left\{e_{v1},\ \ldots,\ e_{vN}\right\}$$, then, by the definition of the matrix $$\bm{H}$$, $$\bm{e}_x=\bm{H}\widetilde{\bm{x}}$$ and $$\bm{e}_v=\bm{H}\widetilde{\bm{v}}$$. Hence, since $$\bm{H}$$ is symmetric and $$\widetilde{\bm{C}}(t)$$ is diagonal, the term $$\left[-\gamma{\widetilde{\bm{x}}}^T\bm{H}-\beta{\widetilde{\bm{v}}}^T\bm{H}+\gamma\bm{w}^T\right]\left[\widetilde{\bm{C}}\left(t\right)\bm{H}\widetilde{\bm{x}}+\widetilde{\bm{C}}(t)\bm{w}\right]$$ can be written in summation form as

$$\sum_{i=1}^{N}\tilde{c}_i(t)\left(-\gamma e_{xi}-\beta e_{vi}+\gamma w_i\right)\left(e_{xi}+w_i\right)$$

Consequently

Following the procedure of Theorem 1, it can then be concluded for Theorem 2 that $$\lim\limits_{t\to\infty}\bm{z}(t)=0$$. □
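The summation form used in this proof relies on two facts: $$\bm{H}$$ is symmetric, so $$\widetilde{\bm{x}}^T\bm{H}=\bm{e}_x^T$$ and $$\widetilde{\bm{v}}^T\bm{H}=\bm{e}_v^T$$, and $$\widetilde{\bm{C}}(t)$$ is diagonal. The sketch below checks numerically, for random vectors and a hypothetical symmetric $$\bm{H}$$, that the bilinear term $$\left[-\gamma\widetilde{\bm{x}}^T\bm{H}-\beta\widetilde{\bm{v}}^T\bm{H}+\gamma\bm{w}^T\right]\left[\widetilde{\bm{C}}(t)\bm{H}\widetilde{\bm{x}}+\widetilde{\bm{C}}(t)\bm{w}\right]$$ equals $$\sum_{i=1}^{N}\tilde{c}_i(t)\left(-\gamma e_{xi}-\beta e_{vi}+\gamma w_i\right)\left(e_{xi}+w_i\right)$$.

```python
import random

rng = random.Random(42)
n, beta, gamma = 4, 3.0, 1.0
# Any symmetric H works for this identity; this one is hypothetical.
h = [[2.0, -1.0, 0.0, 0.0],
     [-1.0, 3.0, -1.0, -1.0],
     [0.0, -1.0, 2.0, -1.0],
     [0.0, -1.0, -1.0, 2.0]]
x_t = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # x-tilde
v_t = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # v-tilde
w = [rng.uniform(-1.0, 1.0) for _ in range(n)]    # filter outputs
c_t = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # diagonal entries of C-tilde(t)


def mat_vec(m, v):
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]


# Matrix form: row vector [-gamma x^T H - beta v^T H + gamma w^T] times column [C H x + C w]
row = [-gamma * sum(x_t[i] * h[i][j] for i in range(n))
       - beta * sum(v_t[i] * h[i][j] for i in range(n))
       + gamma * w[j] for j in range(n)]
hx = mat_vec(h, x_t)
col = [c_t[i] * (hx[i] + w[i]) for i in range(n)]
matrix_form = sum(r * c for r, c in zip(row, col))

# Summation form with e_x = H x-tilde and e_v = H v-tilde
e_x, e_v = mat_vec(h, x_t), mat_vec(h, v_t)
sum_form = sum(c_t[i] * (-gamma * e_x[i] - beta * e_v[i] + gamma * w[i]) * (e_x[i] + w[i])
               for i in range(n))
print(matrix_form, sum_form)
```

The two printed values coincide up to rounding; if $$\bm{H}$$ were not symmetric, the row vector would involve $$\bm{H}^T\widetilde{\bm{x}}$$ instead of $$\bm{e}_x$$ and the identity would generally fail.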

Both conditions specified in Theorem 2 are required in the proof to guarantee the positive definiteness of the matrix in question. Each condition addresses a critical aspect of the system’s dynamics and interaction structure, contributing to the overall stability and convergence toward consensus.

Condition 1 ensures that the communication topology allows appropriate interactions among the agents, while Condition 2 guarantees that the leader can effectively influence all followers. The interplay between these conditions is essential for establishing the positive definiteness of the related matrix, which is a key step in the consensus proof. If either condition were relaxed or violated, positive definiteness might not hold, and the proof of consensus would no longer apply.

Finally, the proposed algorithms are summarized. In the PDAC method, the controller is given by the set of equations (36), and its block diagram is shown in .

. The feedback representation of the PDAC method.

As in the PDAC method, the control input in the FDAC method is given by the set of equations (37), and its block diagram is shown in .

. The feedback representation of the FDAC method.

4. Simulation Results and Discussions

4.1. Simulation Results

This section presents numerical simulations that demonstrate the effectiveness of the proposed adaptive control algorithms. We consider four follower agents and one leader, with the communication topology shown in and the matrix $$\bm{H}$$ given by (38).

. Communication topology of the illustrating example.

The examples in this paper are intended to demonstrate the applicability and effectiveness of the proposed FDAC and PDAC systems in various scenarios. They are not exhaustive; rather, they illustrate the fundamental principles of the proposed approaches and their robustness under different conditions, including varying dynamics and communication constraints. The results obtained from these cases are consistent with the theoretical analysis, which establishes the stability and convergence properties of the FDAC and PDAC methods. Because these properties follow from the mathematical proofs and derivations rather than from the particular examples, the proposed methods are not limited to the cases presented but apply to a broader class of distributed systems. Additional examples would nevertheless strengthen the empirical validation. Future analyses could incorporate a wider range of scenarios, including different network topologies, varying agent dynamics and more complex communication patterns, to provide further evidence of the effectiveness of the proposed methods in solving the distributed identification problem. In short, the examples serve as a foundation for the claims made here, while the theoretical framework and stability proofs are the critical components supporting the conclusions; together, they provide a solid basis for the contribution of this study to the identification problem in distributed systems.

Example 1. In this example, the leader acceleration and unknown nonlinear dynamics of each follower are given as

In this example, the structures of $$u_0$$ and $$f_i,\ i=1,\ldots,4$$ are assumed known, so the basis functions are chosen as $$\bm{\phi}_0=\bm{\phi}_4=[\sin2t\,\,\,\,\cos2t]^T,\bm{\phi}_1=\bm{\phi}_3=[\sin t\,\,\,\,\cos t]^T,\bm{\phi}_2=[\sin3t\,\,\,\,\cos3t]^T$$. For the PDAC method, the controller parameters are set to $$a=5,\ b=3,\ \alpha=5,\ \beta=4,\ \gamma=2$$. shows the simulation result of the PDAC-based controller without the adaptive compensation term. Also, shows the result of the adaptive controller based on the PDAC method. and show the convergence of the estimated parameter arrays $${\hat{\bm{\theta}}}_{0i},\ {\hat{\bm{\theta}}}_i,\ i=1,\ldots,4$$, i.e., the evolution of each agent's parameter estimates as it adapts to the system dynamics. Initially, the estimated parameters fluctuate noticeably. This is expected in adaptive control systems, particularly in the early stages of operation, when agents adjust their estimates based on local observations and interactions with neighboring agents; the fluctuations reflect the agents' response to the uncertainties and disturbances in the system. After approximately 15 s, the estimated parameters settle. This convergence indicates that the PDAC algorithm effectively integrates information from the leader and the neighboring agents, allowing the followers to refine their parameter estimates. The stabilization of the estimated parameters signifies that the agents have reached a consensus on the underlying system dynamics, demonstrating the robustness and effectiveness of the PDAC approach.
The convergence of the estimated parameters to constant values underscores the ability of the PDAC system to achieve consensus among agents despite initial uncertainties. This behavior is crucial in dynamic environments, as it allows the agents to maintain coordinated behavior and achieve collective goals. These figures illustrate the successful application of the PDAC algorithm and its ability to drive the parameter estimates to convergence in a partially distributed manner, thereby enhancing the overall performance of the multi-agent system.
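To illustrate why the adaptive compensation term matters, the sketch below simulates a plain linear protocol that ignores the leader input. Everything in it is assumed for illustration and is not the paper's Example 1: double-integrator followers with $$f_i=0$$, a constant leader acceleration $$u_0$$ unknown to the followers, the control $$u_i=-k_1e_{xi}-k_2e_{vi}$$ with hypothetical gains $$k_1,k_2$$, and a hypothetical path topology with follower 1 linked to the leader. Setting the velocity errors to zero in $$\ddot{\widetilde{\bm{x}}}=-k_1\bm{H}\widetilde{\bm{x}}-k_2\bm{H}\widetilde{\bm{v}}-u_0\mathbf{1}$$ gives the offset $$\widetilde{\bm{x}}_{ss}=-(u_0/k_1)\bm{H}^{-1}\mathbf{1}$$, and the simulated errors settle there instead of at zero.

```python
import numpy as np

# Hypothetical setup (assumptions, not the paper's Example 1):
#   followers: x_i'' = u_i (f_i = 0); leader: x_0'' = u0, u0 constant and unknown,
#   H = L + B for a path graph 1-2-3-4 with follower 1 linked to the leader.
H = np.array([[ 2., -1.,  0.,  0.],
              [-1.,  2., -1.,  0.],
              [ 0., -1.,  2., -1.],
              [ 0.,  0., -1.,  1.]])
k1, k2, u0 = 5.0, 3.0, 1.0          # illustrative gains and leader input
dt, T = 1e-3, 60.0

x_err = np.zeros(4)                 # x_i - x_0
v_err = np.zeros(4)                 # v_i - v_0
for _ in range(int(T / dt)):
    e_x, e_v = H @ x_err, H @ v_err         # local neighbourhood errors
    u = -k1 * e_x - k2 * e_v                # no compensation of u0
    v_err += dt * (u - u0)                  # semi-implicit Euler step
    x_err += dt * v_err

# Equilibrium of the error dynamics: k1 * H x_err = -u0 * 1
x_ss = -u0 / k1 * np.linalg.solve(H, np.ones(4))
print(np.round(x_err, 3), np.round(x_ss, 3))
```

For this H, $$\bm{H}^{-1}\mathbf{1}=[4,7,9,10]^T$$, so the residual error grows with the distance from the follower that hears the leader; estimating and compensating the leader input is what the adaptive terms are intended to do.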

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, obtained from the controller without the adaptive compensation term (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the PDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Convergence of the estimated parameter arrays $$\widehat{\boldsymbol{\theta}}_{0i},\ i=1,\ldots,4$$, based on the PDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Convergence of the estimated parameter arrays $$\widehat{\boldsymbol{\theta}}_i,\ i=1,\ldots,4$$, based on the PDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

Also, shows the simulation results of the adaptive controller based on the FDAC method with the control parameters $$a=5,\ b=3,\ \mu=0.2,\ \beta=4,\ \gamma=2$$. shows the convergence of the adaptive gains, i.e., $$c_i\left(t\right),\ i=1,\ldots,4$$. Also, and show the convergence of the estimated parameters $${\hat{\bm{\theta}}}_{0i},\ {\hat{\bm{\theta}}}_i,\ i=1,\ldots,4$$. Together, these figures illustrate the dynamic performance of the FDAC system through the convergence of the adaptive gains and of the estimated parameter arrays over time. Initially, the adaptive gains fluctuate as the agents begin to adapt to the system dynamics and to the influence of the leader. After approximately 5 s, however, the adaptive gains settle to constant values. This rapid convergence indicates that the FDAC system adjusts its control parameters quickly in response to the varying dynamics, balancing responsiveness and stability so that the agents can coordinate their actions while adapting to real-time conditions. The parameter estimates, in contrast, initially oscillate strongly as the agents actively refine them using local and neighbor information; after about 30 s, these oscillations diminish and the estimates converge towards constant values. This eventual convergence illustrates the ability of the FDAC system to support cooperative learning and parameter estimation among agents, leading to coherent collective behavior. Together, and demonstrate the robustness of the FDAC algorithm in achieving convergence in both the adaptive gains and the parameter estimates.
The quick stabilization of adaptive gains, coupled with the eventual convergence of the parameter arrays, underscores the effectiveness of the fully-distributed approach in managing uncertainty and enabling agents to work in harmony within a multi-agent system.

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Convergence of the adaptive gains $$c_i(t),\ i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Convergence of the estimated parameter arrays $$\widehat{\boldsymbol{\theta}}_{0i},\ i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Convergence of the estimated parameter arrays $$\widehat{\boldsymbol{\theta}}_i,\ i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

Example 2. In this example, relative position measurements are assumed to be unavailable to every agent; instead, each agent measures its absolute position and communicates it to the others. Because of limited data-transmission speed, each agent receives information from the others with a time delay. Consequently, the position of each agent relative to its neighbors is given by

Expression (40) can be used directly in the proposed PDAC and FDAC algorithms. Because the time delay affects the stability and tracking performance of the closed-loop system, this example numerically examines the effect of the delay on tracking performance and, thus, the cost of computing relative positions from delayed absolute positions. and show the simulation results of the PDAC and FDAC methods, respectively, with the same controller parameters as in Example 1 and $$\tau=0.50$$ s.
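A minimal way to produce the delayed information used in this example is a fixed-length buffer per communication link. The sketch assumes that (40) has the form $$x_i(t)-x_j(t-\tau)$$, i.e., each agent combines its own current absolute position with a neighbor's position received $$\tau$$ seconds late; the exact expression (40) is not reproduced here. `DelayedChannel` and `delayed_relative_position` are hypothetical names, and $$\tau$$ is assumed to be an integer multiple of the simulation step.

```python
from collections import deque


class DelayedChannel:
    """Delivers a neighbour's absolute position with a fixed delay tau.

    Hypothetical helper: assumes a fixed sample time dt with tau a multiple
    of dt; until tau seconds have elapsed it returns the initial value.
    """

    def __init__(self, tau, dt, initial):
        self.n = round(tau / dt)
        self.buf = deque([initial] * (self.n + 1), maxlen=self.n + 1)

    def push(self, value):
        self.buf.append(value)  # newest sample; the oldest drops out automatically

    def delayed(self):
        return self.buf[0]      # sample from n steps (= tau seconds) ago


def delayed_relative_position(x_i_now, channel):
    # Assumed form of (40): own current position minus neighbour's delayed position
    return x_i_now - channel.delayed()


# Usage: neighbour moves as x_j(t) = t, sampled every 0.01 s, delay 0.5 s
ch = DelayedChannel(tau=0.5, dt=0.01, initial=0.0)
for k in range(1, 101):
    ch.push(k * 0.01)
print(delayed_relative_position(1.0, ch))
```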

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the PDAC method with delayed communication (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the FDAC method with delayed communication (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

In Examples 1 and 2, the structures of $$u_0$$ and $$f_i,\ i=1,\ldots,4$$ are assumed known. In Examples 3 and 4, these structures are unknown but assumed to be slowly varying, so it is reasonable to use the basis functions $$\phi_0=\phi_i=1.0$$ in the proposed algorithms.
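The linear-regression assumption behind the adaptive laws can be made concrete for the known-structure case. If the leader acceleration has the form $$A\sin(2t+p)$$ (an assumed form for illustration; the paper's expression for $$u_0$$ is not reproduced here), then $$u_0=\bm{\theta}_0^T\bm{\phi}_0(t)$$ exactly with $$\bm{\phi}_0=[\sin2t\,\,\,\cos2t]^T$$ and $$\bm{\theta}_0=[A\cos p\,\,\,A\sin p]^T$$, so only $$\bm{\theta}_0$$ is unknown. The sketch checks this with an offline least-squares fit; the adaptive laws estimate $$\bm{\theta}_0$$ online instead, so this illustrates the parameterization only, not the estimator. With the constant basis $$\phi=1$$, the same model reduces to a single unknown level, which suits slowly varying signals.

```python
import numpy as np


def phi0(t):
    """Basis [sin 2t, cos 2t]^T, one row per time sample."""
    t = np.atleast_1d(t)
    return np.column_stack([np.sin(2 * t), np.cos(2 * t)])


# Hypothetical leader acceleration with the assumed structure A sin(2t + p)
A, p = 1.5, 0.3
t = np.linspace(0.0, 10.0, 501)
u0 = A * np.sin(2 * t + p)

# sin(2t + p) = cos(p) sin 2t + sin(p) cos 2t, so u0 = theta^T phi0(t) exactly
theta_true = np.array([A * np.cos(p), A * np.sin(p)])
theta_ls, *_ = np.linalg.lstsq(phi0(t), u0, rcond=None)
print(theta_true, theta_ls)
```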

Example 3 (performance evaluation of the controllers for slowly varying discontinuous disturbances). In this example, the leader acceleration is a square wave, $$u_0\left(t\right)=5.0\times\mathrm{square}\left(0.05t\right)$$, as shown in . To simplify the analysis of controller performance, $$f_i\left(t\right)=0,\ i=1,\ldots,4$$ is assumed. shows the simulation results of the PDAC method with the controller parameters $$a=5,\ b=2,\ \alpha=10,\ \beta=4,\ \gamma=2$$. Also, shows the result of the FDAC method with the controller parameters $$a=5,\ b=2,\ \mu=0.1,\ \beta=4,\ \gamma=2$$.

. Leader acceleration, $$u_0(t)=5.0\times\mathrm{square}(0.05t)$$, versus time.

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the PDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

Example 4. As in Example 3, the unknown dynamics of each follower, $$f_i\left(t\right)$$, are assumed to be zero, and the leader acceleration is taken to be $$u_0\left(t\right)=4.0\times\mathrm{sawtooth}\left(0.1t\right)$$, as shown in . and show the simulation results of the PDAC and FDAC methods with the same controller parameters as in Example 3.
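The leader accelerations of Examples 3 and 4 can be generated as follows. The sketch assumes MATLAB-style definitions of square and sawtooth (period $$2\pi$$; square equals +1 on the first half of each period and −1 on the second; sawtooth ramps from −1 to +1), which is an assumption about the notation used here; `scipy.signal.square` and `scipy.signal.sawtooth` follow the same conventions and could be used instead. The resulting periods, about 125.7 s for Example 3 and 62.8 s for Example 4, give a sense of how slowly these inputs vary.

```python
import numpy as np

TWO_PI = 2.0 * np.pi


def square(x):
    """+1 on the first half of each 2*pi period, -1 on the second half."""
    return np.where(np.mod(x, TWO_PI) < np.pi, 1.0, -1.0)


def sawtooth(x):
    """Ramp from -1 up to +1 over each 2*pi period."""
    return -1.0 + np.mod(x, TWO_PI) / np.pi


def u0_example3(t):
    return 5.0 * square(0.05 * t)   # period 2*pi/0.05, about 125.7 s


def u0_example4(t):
    return 4.0 * sawtooth(0.1 * t)  # period 2*pi/0.1, about 62.8 s


t = np.array([0.0, 30.0, 70.0, 130.0])
print(u0_example3(t), u0_example4(t))
```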

. Leader acceleration, $$u_0(t)=4.0\times\mathrm{sawtooth}(0.1t)$$, versus time.

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the PDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

. Trajectories of $$x_i-x_0$$ and $$v_i-v_0$$, $$i=1,\ldots,4$$, based on the FDAC method (Black: follower agent #1, Blue: follower agent #2, Red: follower agent #3, Green: follower agent #4).

The present study focused on establishing the theoretical foundations of the FDAC and PDAC methods through Lyapunov-based analysis and stability proofs. The theorems and their proofs provide a robust basis for understanding the performance of the FDAC and PDAC algorithms under an undirected communication topology. While the current analysis emphasizes the collective behavior of the system, a more detailed examination of individual components could further clarify the dynamics involved. Since the FDAC and PDAC methods use global information about the graph topology differently, an in-depth theoretical study of how these methods interact with specific graph structures and their associated equations would strengthen the results. This could include analyzing how graph properties (e.g., connectivity and degree distribution) affect the performance of each algorithm at a granular level. The simulations also suggest that investigating various graph configurations, analyzing the control strategies under distinct types of inter-agent communication failure, and integrating these factors into the theoretical framework would lead to a more comprehensive understanding. The numerical simulations in this study involve four follower agents and one leader, with the communication topology represented by the matrix $$\bm{H}$$. The aim is to evaluate the effectiveness of the FDAC and PDAC methods in achieving consensus among agents with second-order dynamics under varying conditions. In Example 1, both the leader's acceleration and the unknown nonlinear dynamics of each follower agent are predetermined.
For this simulation, the structures of the control input $$u_0$$ and the follower dynamics were assumed known, so that appropriate basis functions could be chosen. illustrates the simulation results of the PDAC method implemented without the adaptive compensation term, showing how the followers track the leader, including their behavior after the transient response. shows the results when the adaptive controller based on the PDAC method is employed. The adaptive compensator significantly improves convergence, as the follower agents adjust their control efforts to account for the unknown leader acceleration. and display the convergence of the estimated parameter arrays, showing how quickly and accurately the follower agents adapt their parameters to the leader's dynamics over time. presents the simulation results for the adaptive controller based on the FDAC method, where all controller parameters are assumed to be known, and underscores the ability of this decentralized approach to maintain consensus among agents. depicts the convergence of the adaptive gains of the FDAC method, emphasizing its robustness in adjusting the gains in real time. and highlight the convergence of the estimated parameters, further supporting the effectiveness of FDAC in achieving the desired control objectives. Example 2 explores a more complex scenario in which no follower can directly measure its relative position. Instead, each agent measures only its absolute position and communicates it to its neighbors. Because of limited data-transmission speed, each agent receives information from the others with a known delay τ = 0.50 s. The effect of this delay on stability and tracking performance is examined numerically.
and display the simulation results of the PDAC and FDAC methods in this setting, illustrating how the delay influences the system response and its ability to maintain a coordinated state. For Examples 3 and 4, the structures of $$u_0$$ and the follower dynamics were assumed unknown but slowly varying, which allows constant basis functions to approximate them. In Example 3, the leader's acceleration is modeled as a square wave, and the follower dynamics are set to zero to simplify the analysis. shows the simulation results of the PDAC method under these assumptions, illustrating how it tracks the leader's trajectory. Similarly, shows the results of the FDAC method, confirming that it performs effectively even when the agent dynamics are uncertain. and examine the results of Examples 3 and 4 further, comparing the performance of both control strategies. In these examples, the FDAC method exhibits better tracking performance than the PDAC method. The comparison between FDAC and PDAC highlights two points:

Decentralization: The FDAC method’s decentralized nature allows for a more flexible and robust response to disturbances and uncertainties, as it does not rely on global information.

Adaptive Gain Determination: The FDAC method determines the controller gains adaptively, tuning the control response in real time. In contrast, the PDAC method uses fixed gains, which can limit performance in dynamically changing environments.

The simulation results affirm that both methods provide viable solutions for leader-follower consensus. However, the FDAC method demonstrates a marked advantage in adaptability and robustness, particularly in scenarios involving unknown dynamics and communication delays.

According to the results of Examples 3 and 4, the FDAC method shows better tracking performance than the PDAC method. In summary, the main reasons for this are:

- FDAC is completely decentralized, while PDAC is not.

- The controller gain is determined adaptively in the FDAC method, whereas in the PDAC method it is chosen in advance.

5. Conclusions

The objective of this study was to solve the distributed leader-follower consensus problem for a group of agents with second-order dynamics under an undirected communication topology. When the leader's acceleration is not communicated to every follower and the followers have unknown dynamics in their intrinsic structure, consensus control implemented in practice becomes delayed and faulty. To address this problem, a linear regression model is assumed for the leader's acceleration and the agents' unknown dynamics. Using this model, Lyapunov-based adaptive control algorithms are developed to control the network of agents despite communication loss and modeling uncertainties. Two multi-agent control strategies, called FDAC and PDAC, are presented. In the first method, the followers have no a priori information about the communication graph, while in the second, some information about the eigenvalues of the communication graph is available. Illustrative simulations show the merits and efficiency of the proposed algorithms relative to the case in which sufficient communication between the leader and the followers is available.

Future Studies

The future research associated with the present article can be summarized as follows:

- Future research could explore the leader-follower consensus problem in systems with higher-order dynamics, which may introduce additional complexities in control design and stability analysis.

- Investigating the effects of external noise and disturbances on the leader-follower consensus system would be crucial. Developing robust control strategies that maintain consensus under such conditions could enhance the practical applicability of the proposed methods.

- Future studies could aim to develop algorithms that allow for decentralized information gathering regarding the communication graph, enabling followers to adapt more dynamically to changes in the network topology.

- Examining the scalability of both FDAC and PDAC methods in larger multi-agent systems could provide insights into the limitations and performance trade-offs of the proposed algorithms as the number of agents increases.

- Implementing the proposed algorithms in real-world multi-agent systems, such as autonomous vehicles or robotic networks, would validate their performance and adaptability in practice, highlighting any practical challenges.

- Investigating the dynamics of multi-leader systems where multiple leaders influence their respective follower groups could broaden the applicability of the consensus algorithms, allowing for more complex coordination tasks.

- Analyzing the impact of time-varying communication topologies on consensus convergence could yield more flexibility and robustness in dynamic environments.

Author Contributions

B.T.: Conceptualization, Methodology, Validation, Formal analysis, Investigation, Writing—Original Draft, Writing—Review & Editing, Supervision. M.R.H.: Methodology, Software, Validation, Formal analysis, Investigation, Writing—Original Draft, Writing—Review & Editing, Visualization.

Ethics Statement

The authors of the article have adhered to the terms and conditions of ethical publications.

Informed Consent Statement

This study did not involve human data or human subjects.

Funding

This research was not supported by any funding agency and was conducted out of the authors' personal interest.

Declaration of Competing Interest

The authors declare no potential conflicts of interest.

References

1.

Xu B. An Integrated Fault-Tolerant Model Predictive Control Framework for UAV Systems. Doctoral Dissertation, University of Victoria, Victoria, BC, Canada, 2024.

2.

Jadbabaie A, Lin J, Morse AS. Coordination of groups of mobile autonomous agents using nearest neighbor rules. IEEE Trans. Autom. Control. 2003, 48, 988–1001.


3.

Xu C, Deng Z, Liu J, Huang C, Hang P. Towards Safe and Robust Autonomous Vehicle Platooning: A Self-Organizing Cooperative Control Framework. arXiv 2024, arXiv:2408.09468.

4.

Hu Y, Lin X, Peng K. Distributed Consensus Filtering Over Sensor Networks with Asynchronous Measurements. Int. J. Adapt. Control. Signal Process. 2024, in press. doi:10.1002/acs.3924.


5.

He T, Goh HH, Yew WK, Kurniawan TA, Goh KC. Enhancing multi-agent system coordination: Fixed-time and event-triggered control mechanism for robust distributed consensus. Ain Shams Eng. J. 2024, 103105, in press. doi:10.1016/j.asej.2024.103105.

6.

Thattai K. Hierarchical Multi-Agent Deep Reinforcement Learning Based Energy Management System for Active Distribution Networks. UNSW Research. Doctoral Dissertation, UNSW Sydney, Kensington, Australia, 2024.

7.

Munir M, Khan Q, Ullah S, Syeda TM, Algethami AA. Control Design for Uncertain Higher-Order Networked Nonlinear Systems via an Arbitrary Order Finite-Time Sliding Mode Control Law. Sensors 2022, 22, 2748.


8.

Wen B, Huang J. Leader-Following Formation Tracking Control of Nonholonomic Mobile Robots Considering Collision Avoidance: A System Transformation Approach. Appl. Sci. 2022, 12, 1257.


9.

Ren Y, Liu S, Li D, Zhang D, Lei T, Wang L. Model-free adaptive consensus design for a class of unknown heterogeneous nonlinear multi-agent systems with packet dropouts. Sci. Rep. 2024, 14, 23093.


10.

Yi Z, Jiang M. Attitude collaborative control strategy for space gravitational wave detection. Syst. Sci. Control. Eng. 2024, 12, 2368661.


11.

Martinelli A, Aboudonia A, Lygeros J. Interconnection of (Q, S, R)-Dissipative Systems in Discrete Time. 2024. Available online: https://www.researchgate.net/profile/Andrea-Martinelli-7/publication/381671295_Interconnection_of_QSR-Dissipative_Systems_in_Discrete_Time/links/667ab7378408575b838a6717/Interconnection-of-Q-S-R-Dissipative-Systems-in-Discrete-Time.pdf (accessed on 3 August 2024).

12.

Chen R-Z, Li Y-X, Hou ZS. Distributed model-free adaptive control for multi-agent systems with external disturbances and DoS attacks. Inf. Sci. 2022, 613, 309–323.


13.

Zhang W, Yan J. Finite-Time Distributed Cooperative Guidance Law with Impact Angle Constraint. Int. J. Aerosp. Eng. 2023, 2023, 5568394.


14.

Li Y, Zhao H, Wang C, Huang J, Li K, Lei Z. A Novel Fuzzy Adaptive Consensus Algorithm for General Strict-feedback Nonlinear Multi-agent Systems Using Backstepping Approaches. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 5829–5836.

15.

Kim Y, Ye H, Lim S, Kim SK. Observer-Based Nonlinear Proportional–Integral–Integral Speed Control for Servo Drive Applications via Order Reduction Technique. Actuators 2023, 13, 2.


16.

Kenyeres M, Kenyeres J. Distributed Network Size Estimation Executed by Average Consensus Bounded by Stopping Criterion for Wireless Sensor Networks. In Proceedings of the 2019 International Conference on Applied Electronics (AE), Pilsen, Czech Republic, 10–11 September 2019; pp. 1–6.

17.

Wang Y, Cheng Z, Xiao M. UAVs’ Formation Keeping Control Based on Multi–Agent System Consensus. IEEE Access 2020, 8, 49000–49012.


18.

Zhang Y, Zhao Z, Ma S. Adaptive neural consensus tracking control for singular multi-agent systems against actuator attacks under jointly connected topologies. Nonlinear Dyn. 2024, doi:10.1007/s11071-024-10418-z.

19.

Boyd S, El Ghaoui L, Feron E, Balakrishnan V. Linear Matrix Inequalities in System and Control Theory; SIAM: Philadelphia, PA, USA, 1994.

20.

Slotine JJE, Li W. Applied Nonlinear Control; Prentice Hall: Upper Saddle River, NJ, USA, 1991.

21.

Marino R, Tomei P. Nonlinear Control Design: Geometric, Adaptive and Robust; Prentice Hall: London, UK, 1995.

22.

Gu H, Lü J, Lin Z. On PID control for synchronization of complex dynamical network with delayed nodes. Sci. China Technol. Sci. 2019, 62, 1412–1422.
